CUDA projects

CUDA (Compute Unified Device Architecture) is a parallel computing platform and programming model by NVIDIA. It provides C/C++ language extensions and APIs for working with CUDA-enabled GPUs.

CLion supports CUDA C/C++ and provides code insight for it. CLion can also help you create CMake-based CUDA applications with the New Project wizard.

CUDA projects in CLion

Before you begin, make sure to install the CUDA Toolkit. For more information about the installation procedure, refer to the official documentation.

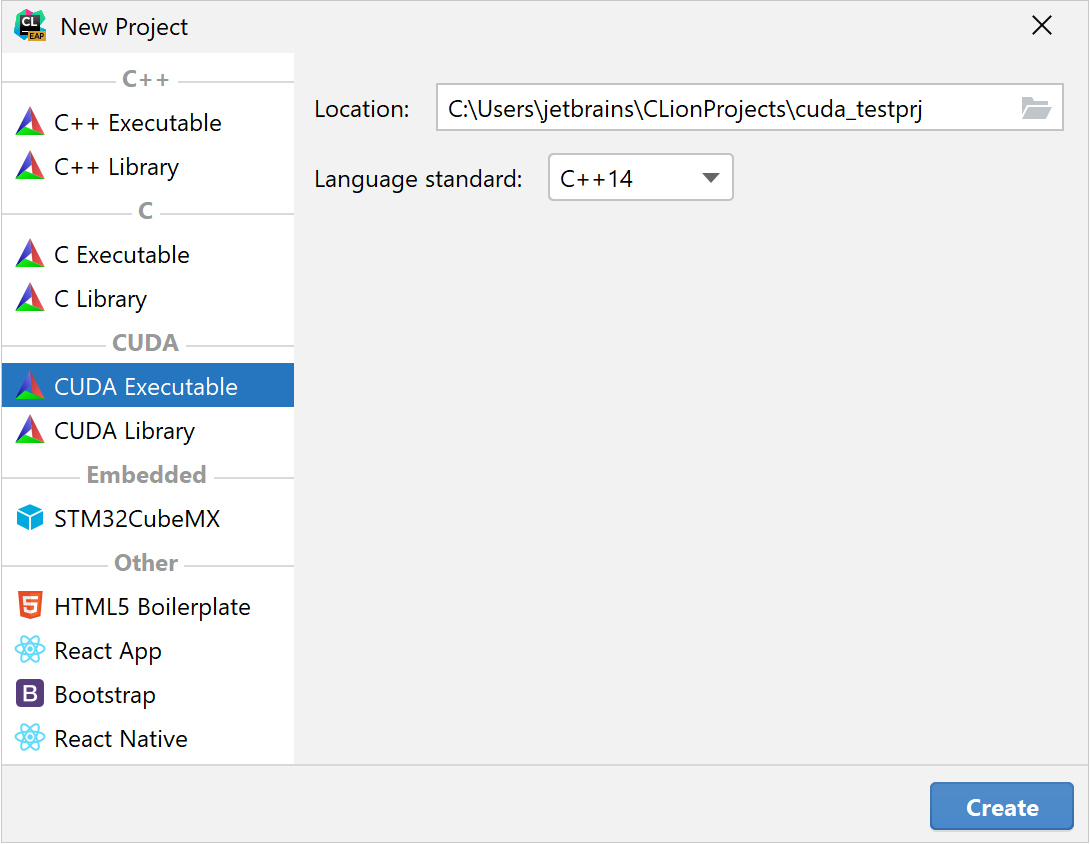

Create a new CUDA project

Open the New Project wizard from the main menu and select CUDA Executable or CUDA Library as your project type.

Specify the project location, language standard, and library type as required.

The selected standard will be set to the

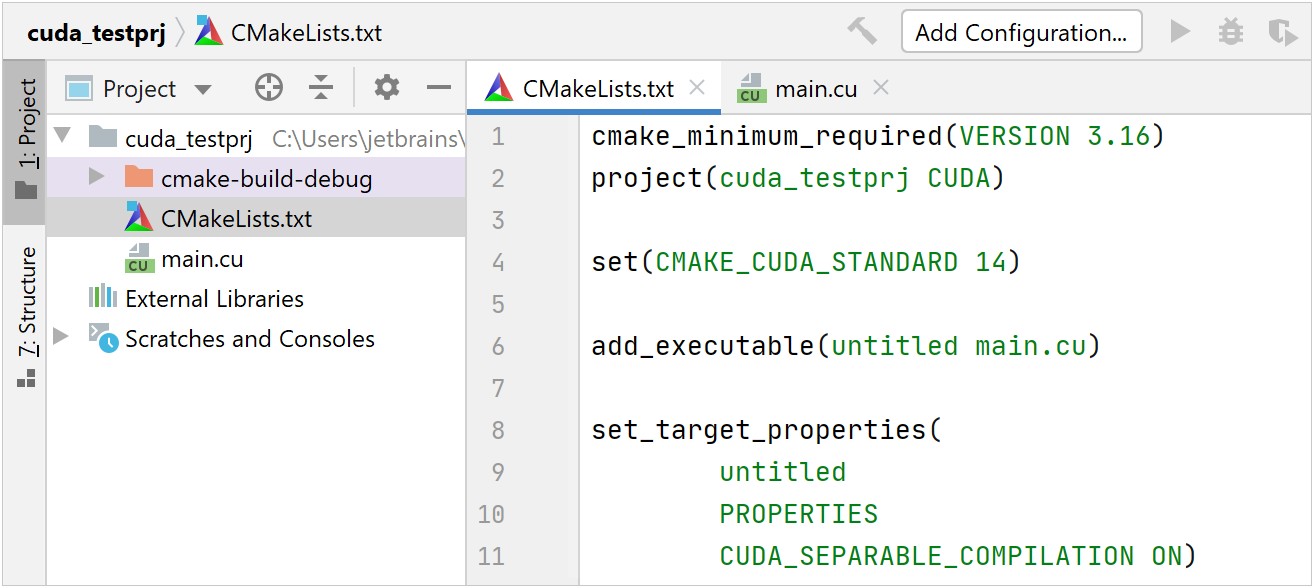

CMAKE_CUDA_STANDARDvariable. If you plan to add regular C/C++ files of another standard to your project, you will need to set theCMAKE_C_STANDARD/CMAKE_CXX_STANDARDvariable in the CMakeLists.txt script manually.Click Create, and CLion will generate a project with the sample CMakeLists.txt and main.cu:
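The following is a minimal sketch of a CMakeLists.txt for a CUDA project that also contains a regular C++ file with a different standard. The project name cuda_testprj, the file helper.cpp, and the standard values are assumptions for illustration. The file CLion actually generates may differ.

```cmake
cmake_minimum_required(VERSION 3.18)   # CUDA_STANDARD 17 needs CMake 3.18+
# Hypothetical project name; enables both CUDA and C++.
project(cuda_testprj LANGUAGES CUDA CXX)

# The standard selected in the New Project wizard is stored here.
set(CMAKE_CUDA_STANDARD 17)

# Set manually when regular C++ files should use another standard.
set(CMAKE_CXX_STANDARD 20)

# main.cu is compiled by NVCC, helper.cpp (hypothetical) by the C++ compiler.
add_executable(cuda_testprj main.cu helper.cpp)
```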

Open an existing CUDA project

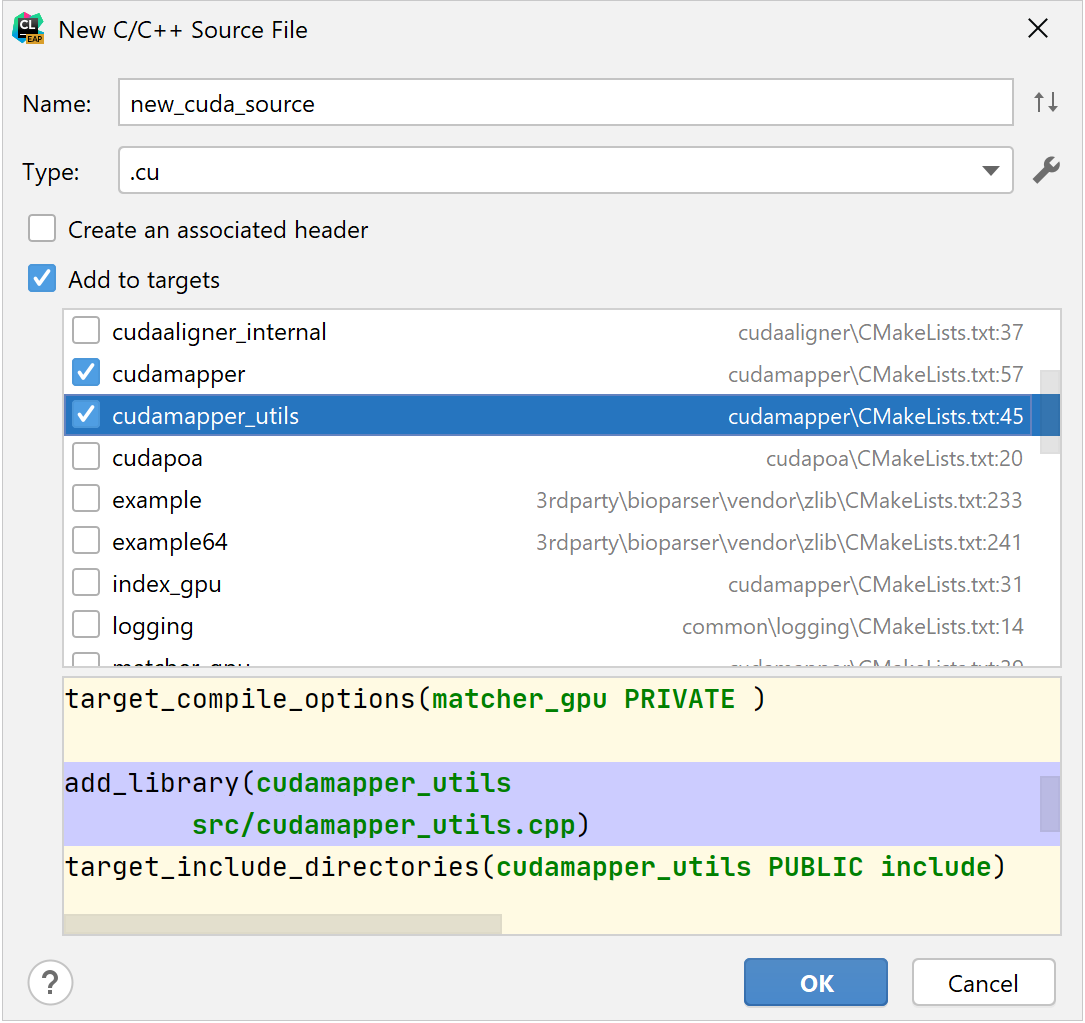

Add new .cu/.cuh files

Right-click the desired folder in the Project tree and choose to create a new C/C++ source or header file from the context menu.

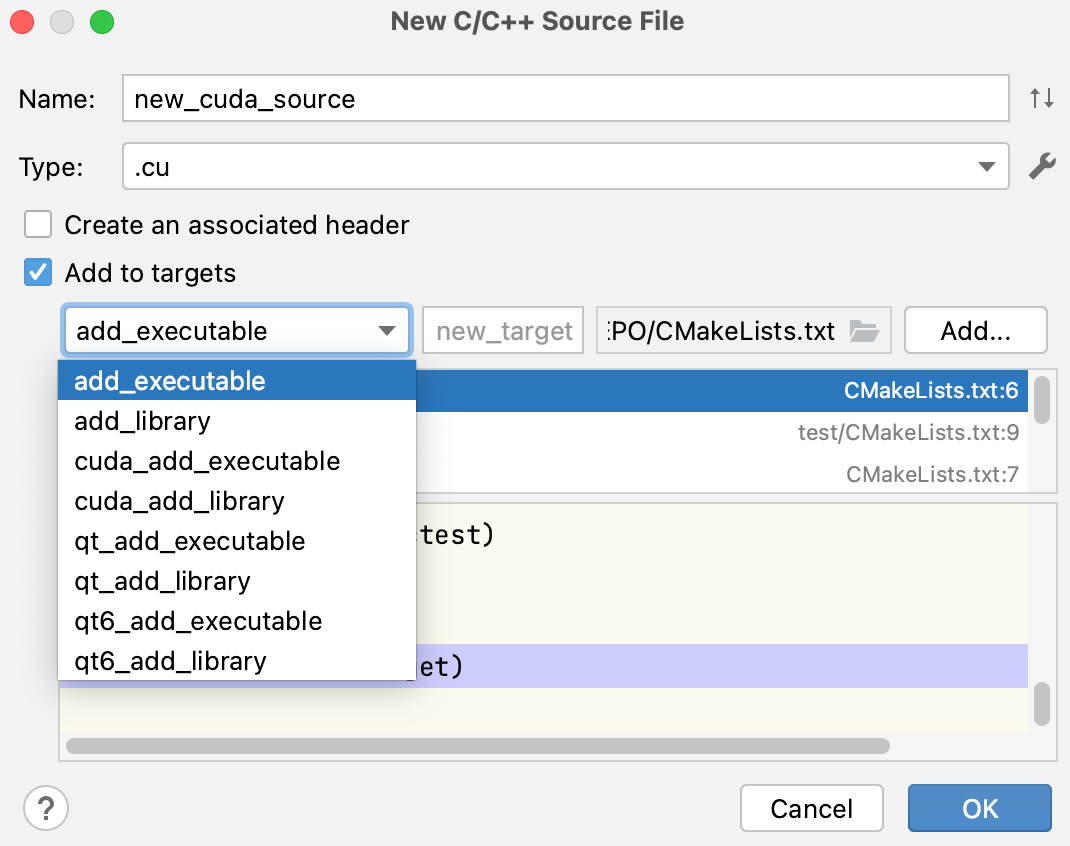

In the Type field, select .cu or .cuh for a CUDA source or CUDA header, respectively.

If you want the new file to be automatically added to one or more CMake targets, select the Add to targets checkbox and choose the required targets from the list.

The options will include both general CMake targets and the targets created with cuda_add_executable/cuda_add_library (refer to CUDA CMake language).

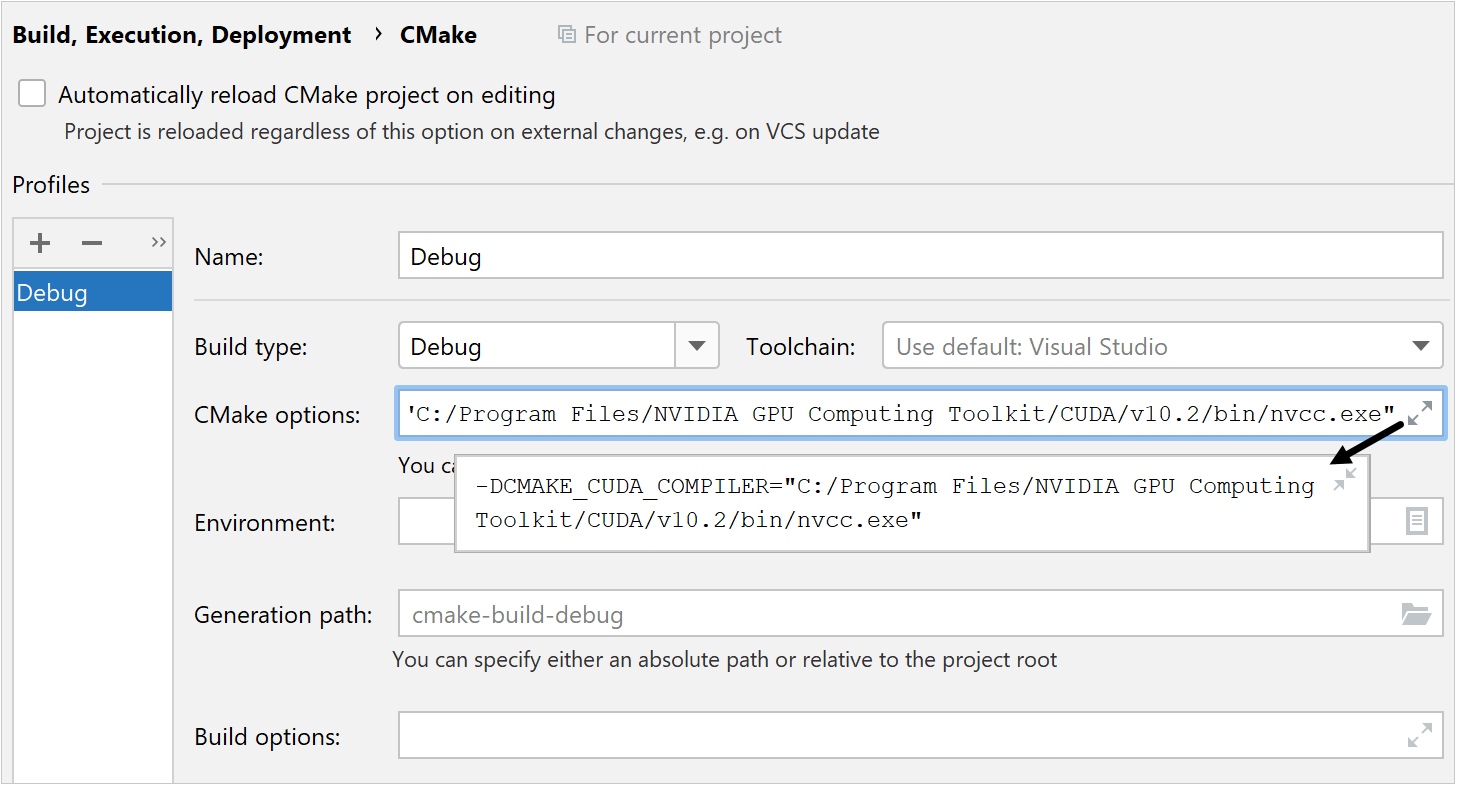

Set up the CUDA compiler

All the .cu/.cuh files must be compiled with NVCC, the LLVM-based CUDA compiler driver.

To detect NVCC, CMake needs to know where to find the CUDA toolchain. Use one of the following options:

Set the CUDA toolchain path in the system PATH variable.

On Linux, it is recommended that you add /usr/local/cuda-<version>/bin to PATH in the /etc/environment configuration file. This makes the CUDA Toolkit location available regardless of whether you're working from the terminal, using a desktop launcher, or connecting to a remote Linux machine. For more information, refer to the official Installation Guide for Linux.

Alternatively, specify the path to NVCC in CMake. One way to do that is to set the CMAKE_CUDA_COMPILER variable to the location of the NVCC executable. You can either add this variable to CMakeLists.txt or pass it in the CMake options field of your CMake profile.
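For example, on Windows, the following sketch assumes the CUDA Toolkit is installed in its default location, C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v<version>, where <version> stands for your toolkit version. The project name is hypothetical. CMake reads CMAKE_CUDA_COMPILER when the CUDA language is enabled, so the set() call has to come before project(). In the CMake options field, the equivalent would be -DCMAKE_CUDA_COMPILER="C:/Program Files/NVIDIA GPU Computing Toolkit/CUDA/v<version>/bin/nvcc.exe".

```cmake
cmake_minimum_required(VERSION 3.8)

# Must precede project(): the CUDA compiler is detected when CUDA is enabled.
# Replace <version> with your installed toolkit version (assumed default path).
set(CMAKE_CUDA_COMPILER "C:/Program Files/NVIDIA GPU Computing Toolkit/CUDA/v<version>/bin/nvcc.exe")

project(cuda_testprj CUDA)   # hypothetical project name
```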

Configure a non-default host compiler

For compiling host code, NVCC calls the system's default C++ compiler (gcc/g++ on Linux and cl.exe on Windows). You can check the supported versions in the official guides for Windows and Linux.

If your system's default compiler is not compatible with your CUDA Toolkit, you can specify a custom compiler executable to be used by NVCC instead.

Choose one of the options:

Use the CUDAHOSTCXX environment variable:

CUDAHOSTCXX=/path/to/compiler

To set it for the current project only, use the Environment field in your CMake profile settings.

To make the setting system-wide, add this variable in /etc/environment.

Use CMake variables

For CUDA projects that use CUDA as a language: CMAKE_CUDA_HOST_COMPILER and CMAKE_CUDA_FLAGS

For CUDA projects that use find_package(CUDA): CUDA_HOST_COMPILER and CUDA_NVCC_FLAGS

Add the following options to the CMake options field of your CMake profile:

-DCMAKE_CUDA_HOST_COMPILER=/path/to/compiler -DCMAKE_CUDA_FLAGS="-ccbin /path/to/compiler"

Alternatively, set the variables in CMakeLists.txt:

set(CMAKE_CUDA_HOST_COMPILER "/path/to/compiler")
set(CMAKE_CUDA_FLAGS "${CMAKE_CUDA_FLAGS} -ccbin /path/to/compiler")

To use your toolchain's compiler, replace the path with ${CMAKE_CXX_COMPILER}:

set(CMAKE_CUDA_HOST_COMPILER "${CMAKE_CXX_COMPILER}")
set(CMAKE_CUDA_FLAGS "${CMAKE_CUDA_FLAGS} -ccbin ${CMAKE_CXX_COMPILER}")
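In projects that use find_package(CUDA), the corresponding variables are CUDA_HOST_COMPILER and CUDA_NVCC_FLAGS. The host compiler can be passed in the CMake options field as -DCUDA_HOST_COMPILER=/path/to/compiler. The sketch below shows where NVCC flags go in such a project. It assumes a hypothetical legacy_app target. Note that the FindCUDA module has been deprecated since CMake 3.10.

```cmake
cmake_minimum_required(VERSION 3.8)
project(legacy_app CXX)   # hypothetical project name

# Legacy module (deprecated since CMake 3.10); provides cuda_add_executable().
find_package(CUDA REQUIRED)

# CUDA_NVCC_FLAGS is a list, so append each flag as a separate item.
list(APPEND CUDA_NVCC_FLAGS -lineinfo)

# main.cu is compiled by NVCC through the FindCUDA wrapper.
cuda_add_executable(legacy_app main.cu)
```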

On Windows, the host compiler changes automatically when you switch between Visual Studio installations in your MSVC toolchain.

CMake for CUDA projects

CUDA language

CUDA is supported as a language in CMake starting from version 3.8. Near the beginning of the CMakeLists.txt script that CLion generates for a new CUDA project, there is a project() command that specifies CUDA for the LANGUAGES option. The LANGUAGES keyword itself is omitted to shorten the line.

As an example, you can write project(project_name LANGUAGES CUDA CXX) to enable both CUDA and C++ as your project languages.
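For illustration, the sketch below shows both forms of the command with a hypothetical project name, cuda_testprj. The alternatives are commented out because a script uses only one of them. CUDA can also be enabled after project() with the enable_language command.

```cmake
cmake_minimum_required(VERSION 3.8)

# CUDA only; the LANGUAGES keyword is omitted.
project(cuda_testprj CUDA)

# Alternatives (use one form, not several):
# project(cuda_testprj LANGUAGES CUDA CXX)   # CUDA and C++
# project(cuda_testprj CXX)                  # C++ first...
# enable_language(CUDA)                      # ...then enable CUDA later
```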

NVCC compiler options

To specify compiler flags for NVCC, set the CMAKE_CUDA_FLAGS variable. This way, the flags will be used globally for all targets.
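The following is a minimal sketch of the global approach. The project name, target names, and sources are hypothetical. -lineinfo (line-number information for device code) is only an example flag.

```cmake
cmake_minimum_required(VERSION 3.8)
project(cuda_testprj CUDA)   # hypothetical project name

# CMAKE_CUDA_FLAGS is a single string. Appending keeps any flags already
# passed from the CMake options field. It applies to every CUDA target here.
set(CMAKE_CUDA_FLAGS "${CMAKE_CUDA_FLAGS} -lineinfo")

add_executable(app_one one.cu)   # hypothetical targets and sources
add_executable(app_two two.cu)
```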

Another approach is to set the flags for specific targets with the target_compile_options command.
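For example, the sketch below assumes a hypothetical target cuda_testprj that mixes .cu and .cpp sources. A generator expression passes the NVCC-only flag --expt-relaxed-constexpr to CUDA sources only.

```cmake
cmake_minimum_required(VERSION 3.8)
project(cuda_testprj LANGUAGES CUDA CXX)   # hypothetical project name

add_executable(cuda_testprj main.cu helper.cpp)   # hypothetical sources

# Only this target gets the flag, and $<COMPILE_LANGUAGE:CUDA> limits it to
# CUDA sources so that the C++ compiler never sees an NVCC-specific option.
target_compile_options(cuda_testprj PRIVATE
    $<$<COMPILE_LANGUAGE:CUDA>:--expt-relaxed-constexpr>)
```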

Separable compilation

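By default, NVCC uses whole-program compilation, so a kernel can only call device functions defined in the same translation unit. You can enable separable compilation instead. Each CUDA source file is then compiled into a separate object with relocatable device code, and the objects are device-linked afterwards. This allows device functions to be called across files.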

You can control separable compilation via the CMAKE_CUDA_SEPARABLE_COMPILATION variable.

Add the set(CMAKE_CUDA_SEPARABLE_COMPILATION ON) command to turn it on globally.

Use the CUDA_SEPARABLE_COMPILATION property to enable it for a particular target:

set_target_properties(cuda_testprj PROPERTIES CUDA_SEPARABLE_COMPILATION ON)
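The sketch below shows a case where separable compilation is required. In this example, kernel.cu contains a kernel that calls a __device__ function defined in device_math.cu. All file names and the project name are hypothetical.

```cmake
cmake_minimum_required(VERSION 3.8)
project(cuda_testprj CUDA)   # hypothetical project name

# kernel.cu calls a __device__ function defined in device_math.cu.
# Whole-program compilation cannot resolve that call across files.
# Separable compilation emits relocatable device code and adds a
# device-link step for the target.
add_executable(cuda_testprj main.cu kernel.cu device_math.cu)
set_target_properties(cuda_testprj PROPERTIES CUDA_SEPARABLE_COMPILATION ON)
```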

Adding targets

When CUDA is enabled as a language, you can use regular add_executable/add_library commands to create executables and libraries that contain CUDA code:

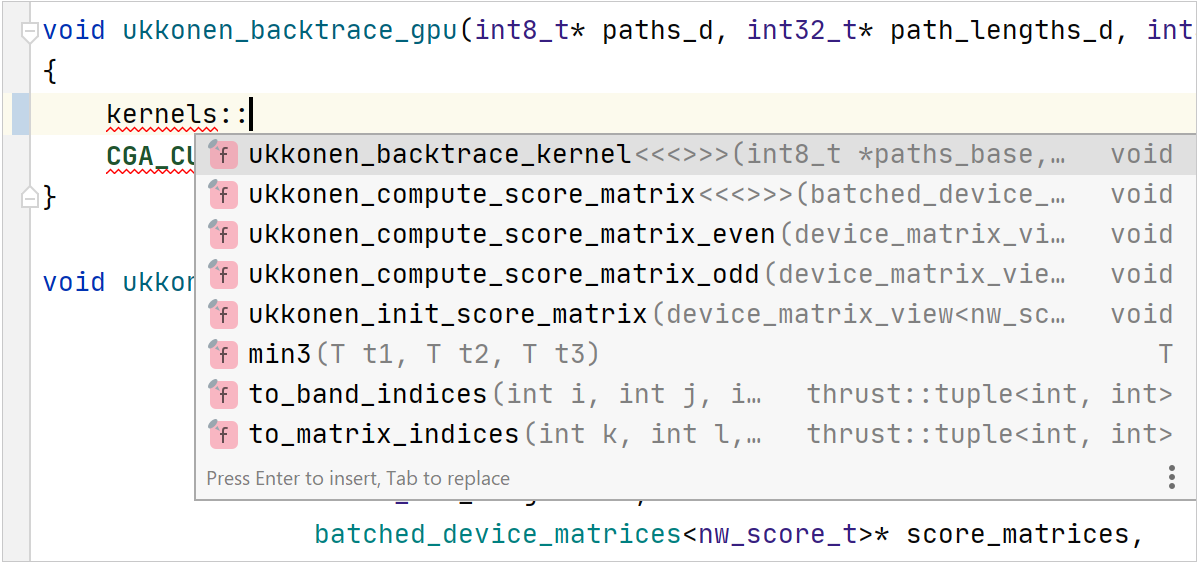

Another option is to add CUDA targets when adding new files. Click Add new target and then select the required command from the drop-down list.

CMake will call the appropriate compilers depending on the file extension.
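As a rough sketch of extension-based compiler selection, the example below builds a library with CUDA code and an executable with mixed sources. All target and file names are hypothetical.

```cmake
cmake_minimum_required(VERSION 3.8)
project(cuda_testprj LANGUAGES CUDA CXX)   # hypothetical project name

# Library with CUDA code: kernels.cu is compiled by NVCC.
add_library(gpu_kernels STATIC kernels.cu)

# Mixed target: main.cu goes to NVCC, helper.cpp to the C++ compiler.
add_executable(cuda_testprj main.cu helper.cpp)
target_link_libraries(cuda_testprj PRIVATE gpu_kernels)
```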

Code insight for CUDA C/C++

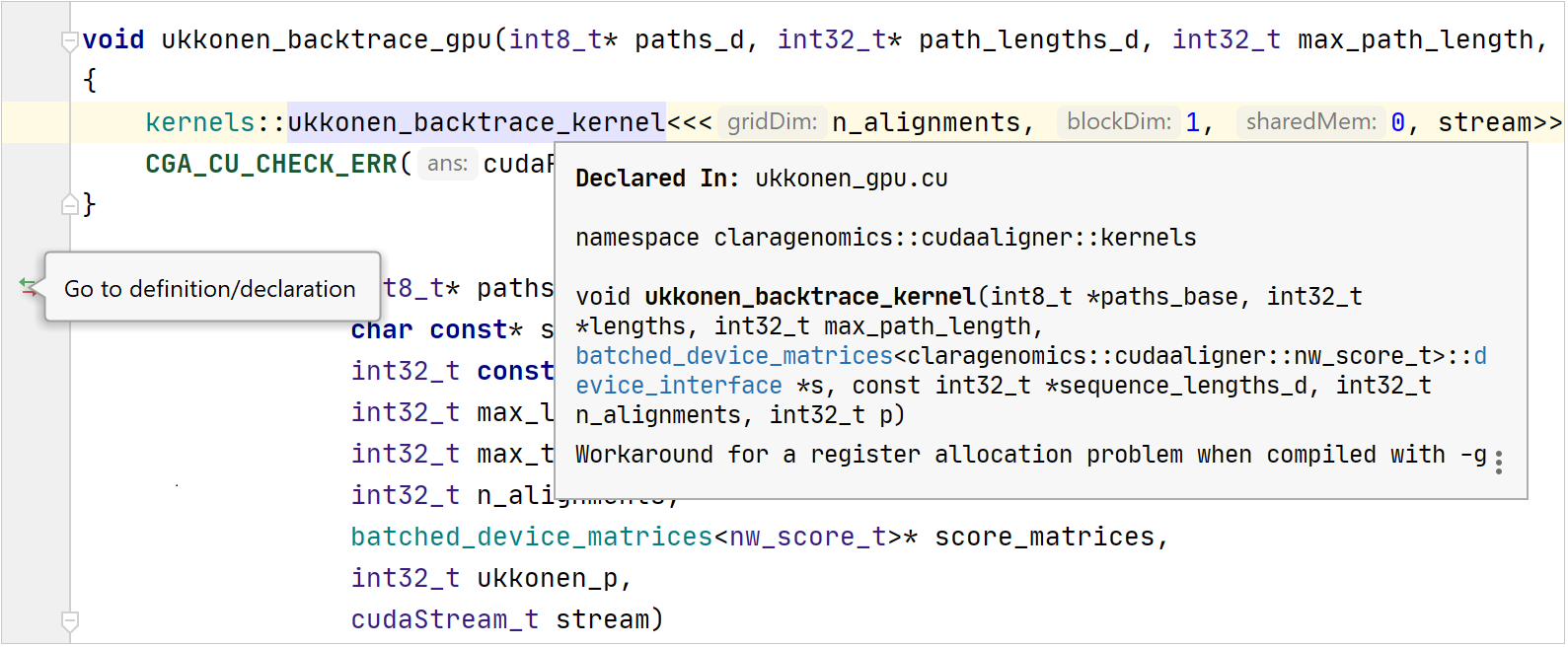

CLion parses and correctly highlights CUDA code, so navigation, quick documentation, and other coding assistance features work as expected.

In addition, code completion is available for the triple angle brackets (<<<...>>>) in kernel calls.

Debugging with cuda-gdb

On Linux, you can debug CUDA kernels using cuda-gdb.

Set cuda-gdb as a custom debugger

In the Toolchains settings, provide the path to cuda-gdb in the Debugger field of the current toolchain.

Use the -G compiler option to add debug information for CUDA device code, for example by adding add_compile_options(-G) to the CMakeLists.txt script. Alternatively, pass the flag in the CMake options of your profile, for example -DCMAKE_CUDA_FLAGS=-G.
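Because -G turns off most device code optimizations, it can be useful to restrict it to debug builds. The following is a minimal sketch that assumes a hypothetical cuda_testprj target. It uses CMAKE_CUDA_FLAGS_DEBUG, which applies only to CUDA sources in the Debug configuration.

```cmake
cmake_minimum_required(VERSION 3.8)
project(cuda_testprj CUDA)   # hypothetical project name

# Only CUDA sources in Debug builds get -G. C/C++ files and Release
# builds are not affected.
set(CMAKE_CUDA_FLAGS_DEBUG "${CMAKE_CUDA_FLAGS_DEBUG} -G")

add_executable(cuda_testprj main.cu)
```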

Known issues and limitations

On Windows, the LLDB-based debugger, which CLion bundles for the MSVC toolchain, might have issues with CUDA code.

On macOS, CLion's support for CUDA projects has not been tested since this platform is officially unsupported starting from version 10.13.

Currently, Code Coverage, Valgrind Memcheck, and CPU Profiling tools don't work properly for CUDA projects.