Jest

Jest is a testing platform for client-side JavaScript applications and React applications specifically. Learn more about the platform from the Jest official website.

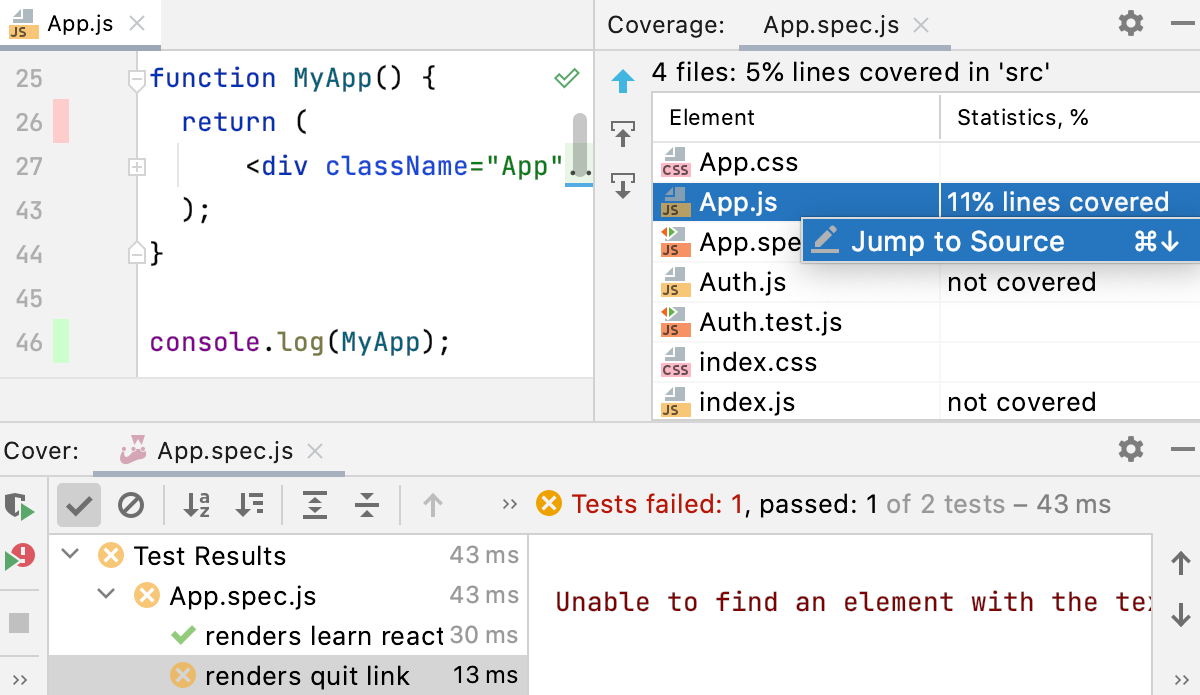

You can run and debug tests with Jest right in JetBrains Rider. You can see the test results in a tree view and easily navigate to the test source from there. Test status is shown next to the test in the editor with an option to quickly run or debug it.

Before you start

Download and install Node.js.

Installing and configuring Jest

In the embedded Terminal (Alt+F12), type:

npm install --save-dev jest

You can also install the jest package on the Node.js and NPM page as described in npm, pnpm, and Yarn.

Learn more from Getting Started and Configuring Jest on the Jest official website.

Running tests

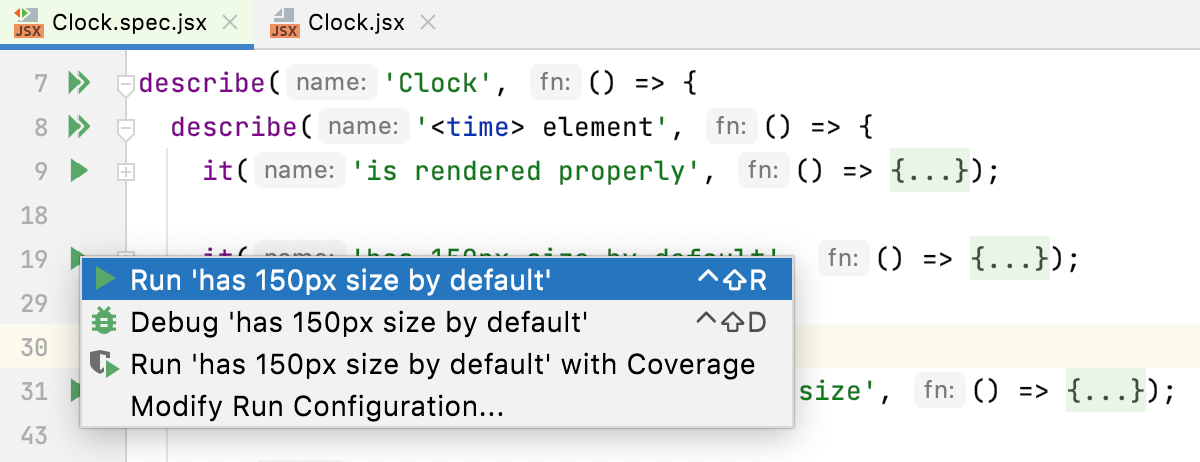

With JetBrains Rider, you can quickly run a single Jest test right from the editor or create a run/debug configuration to execute some or all of your tests.

To run a single test from the editor

Click the run or rerun icon in the gutter and select Run <test_name> from the list.

You can also see whether a test has passed or failed right in the editor, thanks to the test status icons in the gutter.
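For illustration, a minimal test file might look like the sketch below. The file name sum.test.js and the sum function are hypothetical and not part of any project described here. The describe, test, and expect functions are globals provided by Jest, so the file needs no imports. The run icons and test status icons described above should appear in the gutter next to test definitions like these.

```javascript
// sum.test.js (hypothetical file name; Jest picks up *.test.js files by default)

// Function under test, kept in the same file so the sketch is self-contained.
function sum(a, b) {
  return a + b;
}

// describe, test, and expect are globals that Jest injects when it runs the file.
describe('sum', () => {
  test('adds two positive numbers', () => {
    expect(sum(1, 2)).toBe(3);
  });

  test('adds a negative number', () => {
    expect(sum(5, -2)).toBe(3);
  });
});
```

In a real project the function under test would normally live in its own module and be imported into the test file.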

To create a Jest run configuration

Open the Run/Debug Configuration dialog from the main menu, click the Add button in the left-hand pane, and select Jest from the list. The Run/Debug Configuration: Jest dialog opens.

Specify the Node.js interpreter to use. This can be a local Node.js interpreter or a Node.js on Windows Subsystem for Linux.

Specify the location of the jest, react-scripts, react-scripts-ts, react-super-scripts, or react-awesome-scripts package.

Specify the working directory of the application. By default, the Working directory field shows the project root folder. To change this predefined setting, specify the path to the desired folder or choose a previously used folder from the list.

Optionally specify the jest.config file to use: select the relevant file from the list, click the browse button and select it in the dialog that opens, or just type the path in the field. If the field is empty, JetBrains Rider looks for a package.json file with a jest key. The search is performed in the file system upwards from the working directory. If no appropriate package.json file is found, a Jest default configuration is generated on the fly.

Optionally configure rerunning the tests automatically on changes in the related source files. To do that, add the --watch flag in the Jest options field.

To run tests via a run configuration

Select the Jest run/debug configuration from the list on the main toolbar and click the Run button to the right of the list.

The test server starts automatically without any steps from your side. View and analyze messages from the test server in the Run tool window.

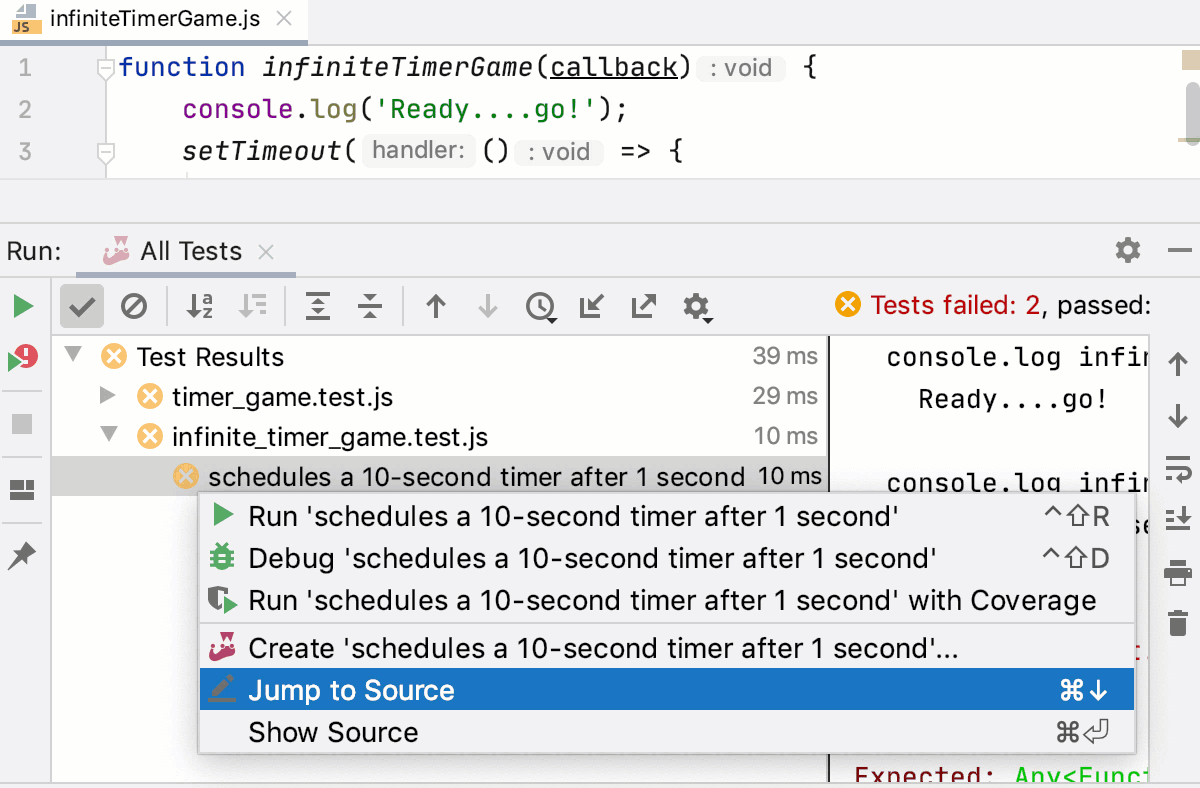

Monitor test execution and analyze test results in the Test Runner tab of the Run tool window. See Explore test results for details.

To rerun failed tests

In the Test Runner tab, click the Rerun Failed Tests button on the toolbar. JetBrains Rider will execute all the tests that failed during the previous session.

To rerun a specific failed test, use the run command on its context menu.

See Rerunning tests for details.

Navigation

With JetBrains Rider, you can jump between a file and the related test file or from a test result in the Test Runner tab to the test.

To jump between a test and its subject or vice versa, open the file in the editor and use the corresponding navigation command from the context menu, or just press Ctrl+Shift+T.

To jump from a test result to the test definition, double-click the test name in the Test Runner tab or use the jump-to-source command from the context menu. The test file opens in the editor with the cursor placed at the test definition.

For failed tests, JetBrains Rider brings you to the failure line in the test from the stack trace. If the exact line is not in the stack trace, you will be taken to the test definition.

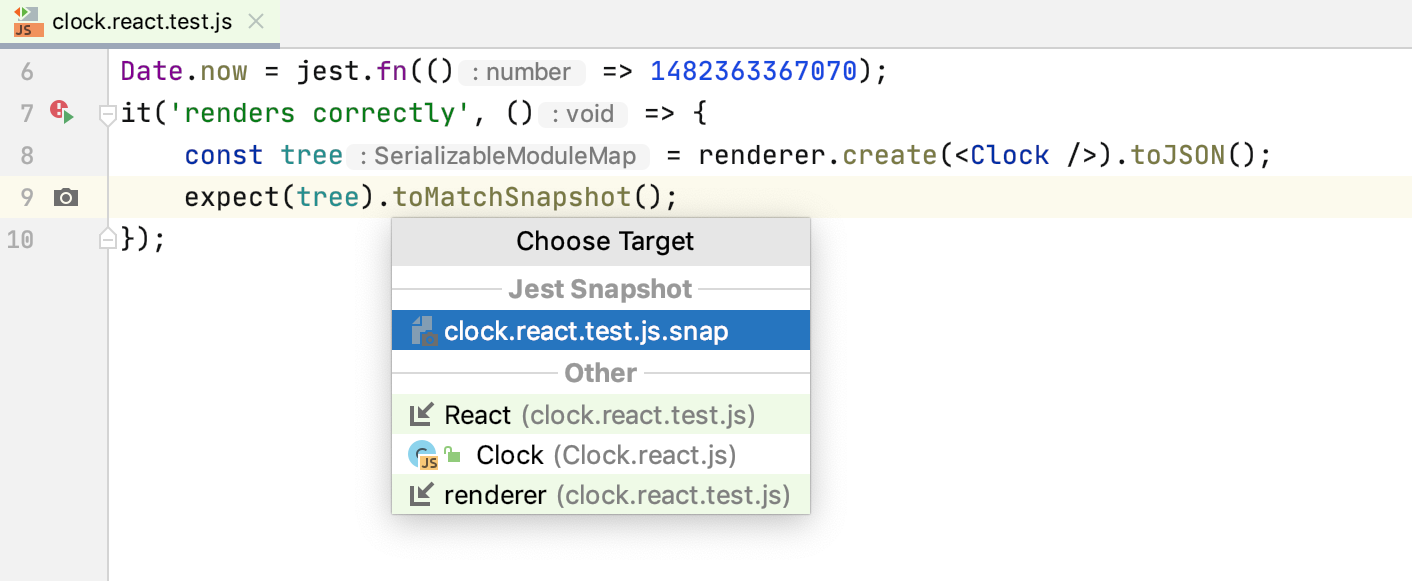

Snapshot testing

JetBrains Rider integration with Jest supports snapshot testing.

When you run a test with a .toMatchSnapshot() method, Jest creates a snapshot file in the __snapshots__ folder. To jump from a test to its related snapshot, click the snapshot icon in the gutter next to the test or select the required snapshot from the context menu of the .toMatchSnapshot() method.

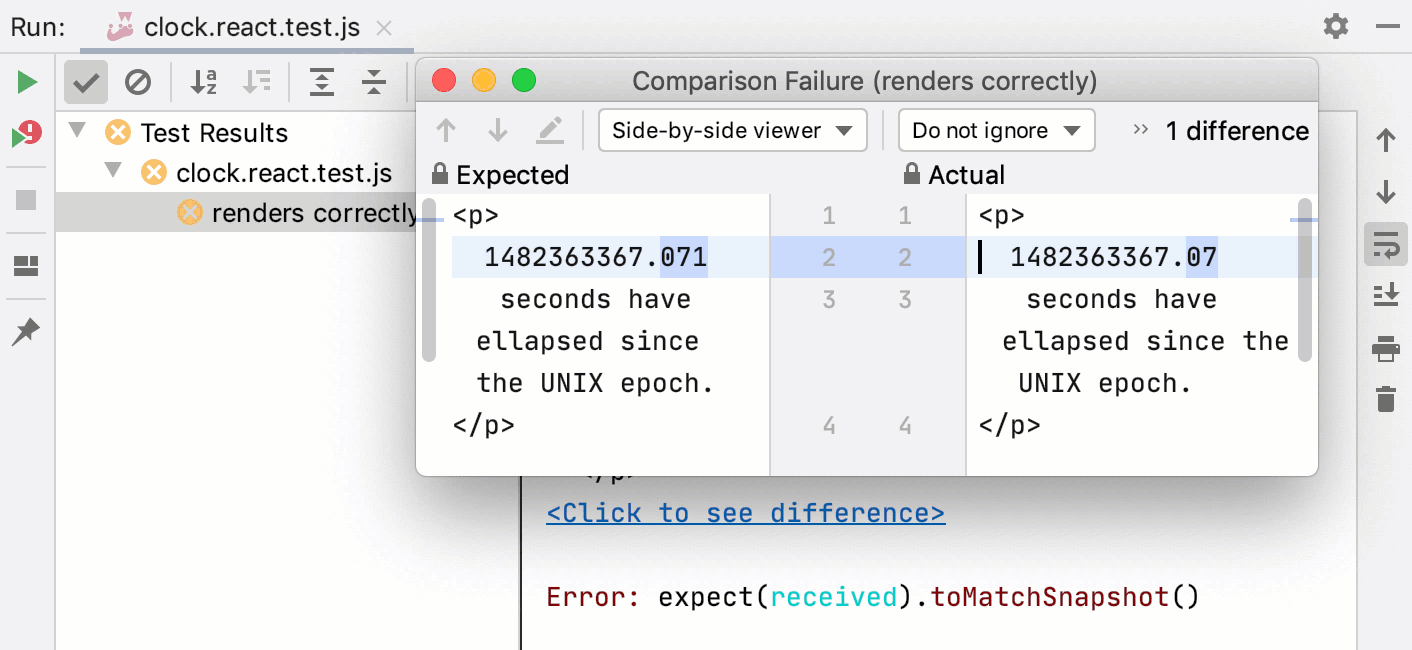
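A snapshot test of the kind described here can be sketched as follows. The file name greeting.test.js and the renderGreeting function are hypothetical. A plain string stands in for rendered component output to keep the example free of dependencies. By Jest's usual naming convention, the snapshot for this file would be written to __snapshots__/greeting.test.js.snap.

```javascript
// greeting.test.js (hypothetical file name)

// Hypothetical function under test: returns the markup for a greeting.
function renderGreeting(name) {
  return `<h1 class="greeting">Hello, ${name}!</h1>`;
}

test('renders the greeting markup', () => {
  // On the first run Jest stores the value in the __snapshots__ folder;
  // on later runs it compares the value against the stored snapshot.
  expect(renderGreeting('Ada')).toMatchSnapshot();
});
```

If the markup returned by renderGreeting changes later, the stored snapshot no longer matches and the test fails until the code is fixed or the snapshot is updated.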

If a snapshot does not match the rendered application, the test fails. This indicates that either some changes in your code have caused this mismatch or the snapshot is outdated and needs to be updated.

To see what caused this mismatch, open the JetBrains Rider built-in Difference Viewer via the Click to see difference link in the right-hand pane of the Test Runner tab.

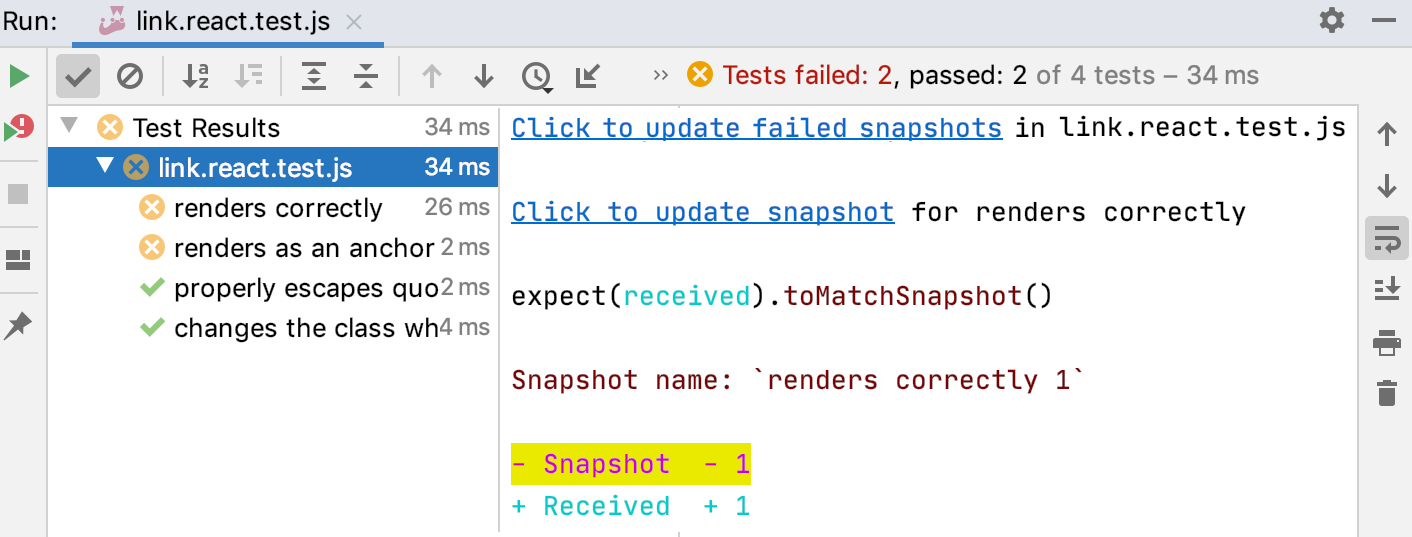

You can update outdated snapshots right from the Test Runner tab of the Run tool window.

To update the snapshot for a specific test, use the Click to update snapshot link next to the test name.

To update all outdated snapshots for the tests from a file, use the Click to update failed snapshots link next to the test file name.

Debugging tests

With JetBrains Rider, you can quickly start debugging a single Jest test right from the editor or create a run/debug configuration to debug some or all of your tests.

To start debugging a single test from the editor, click the run or rerun icon in the gutter and select Debug <test_name> from the list.

To launch test debugging via a run/debug configuration, create a Jest run/debug configuration as described above. Then select the Jest run/debug configuration from the list on the main toolbar and click the Debug button to the right of the list.

In the Debug window that opens, proceed as usual: step through the tests, stop and resume test execution, examine the test when suspended, run JavaScript code snippets in the Console, and so on.

Monitoring code coverage

With JetBrains Rider, you can also monitor how much of your code is covered with Jest tests. JetBrains Rider displays these statistics in a dedicated Coverage tool window and marks covered and uncovered lines visually in the editor and in the Project tool window.

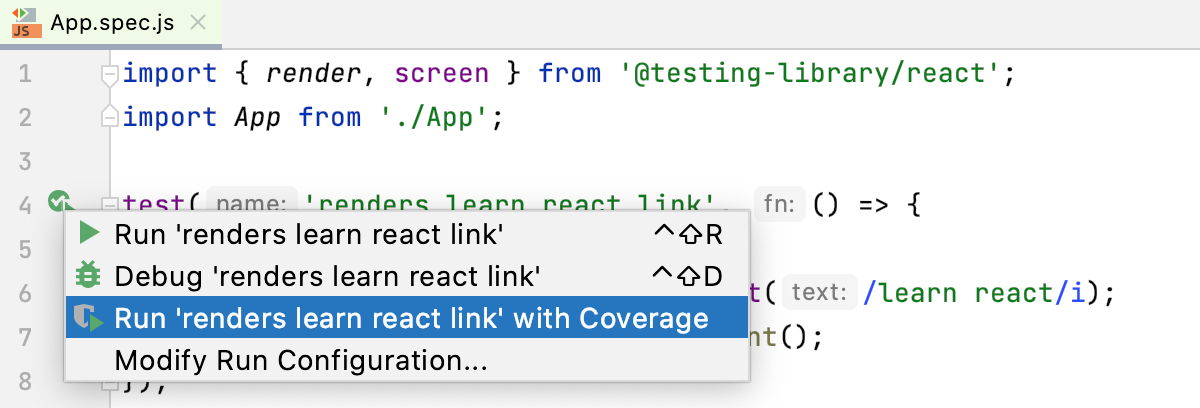

To run tests with coverage

Create a Jest run/debug configuration as described above.

Select the Jest run/debug configuration from the list on the main toolbar and click the Run with Coverage button to the right of the list.

Alternatively, quickly run a specific suite or a test with coverage from the editor: click the run or rerun icon in the gutter and select Run <test_name> with Coverage from the list.

Monitor the code coverage in the Coverage tool window. The report shows how many files were covered with tests and the percentage of covered lines in them. From the report you can jump to the file and see what lines were covered – marked green – and what lines were not covered – marked red: