Create a Multi-Job Pipeline

This tutorial demonstrates how to create a sequence of dependent Jobs and pass files produced by one Job into another.

Fork the sample Gradle/Docker Pipeline repository to your GitHub account. This repository contains a Gradle project that builds a Spring Boot application, and a Dockerfile for making a Docker image.

Click New Pipeline... and wait while TeamCity uses the OAuth connection you provided to scan your VCS and display the list of available repositories. Click the forked repository to create a new Pipelines project.

Open the Job settings, expand the automatically created Step 1 and change its type from Script to Gradle.

Type `clean build -x test` in the Tasks field.

Gradle recommends running builds with the Gradle Wrapper, a script that lets a build use a specific version of Gradle and, if that version is not present, downloads it before the build starts. Ensure the Use Gradle Wrapper option in the Step settings section is enabled so that TeamCity looks for the Wrapper inside the repository.

Tip: In our sample project, all Wrapper-related files have default names and locations. TeamCity detects and uses such Wrappers automatically. To specify a custom location, enter a path in the Gradle Wrapper path field.

Enter `build/libs/` in the Artifacts field to tell TeamCity to publish this folder, which contains the resulting .jar file, when the Gradle Step finishes.

Save and run your Pipeline and ensure it finishes successfully. Note that published files are now available from the Artifacts tab of the build results page.

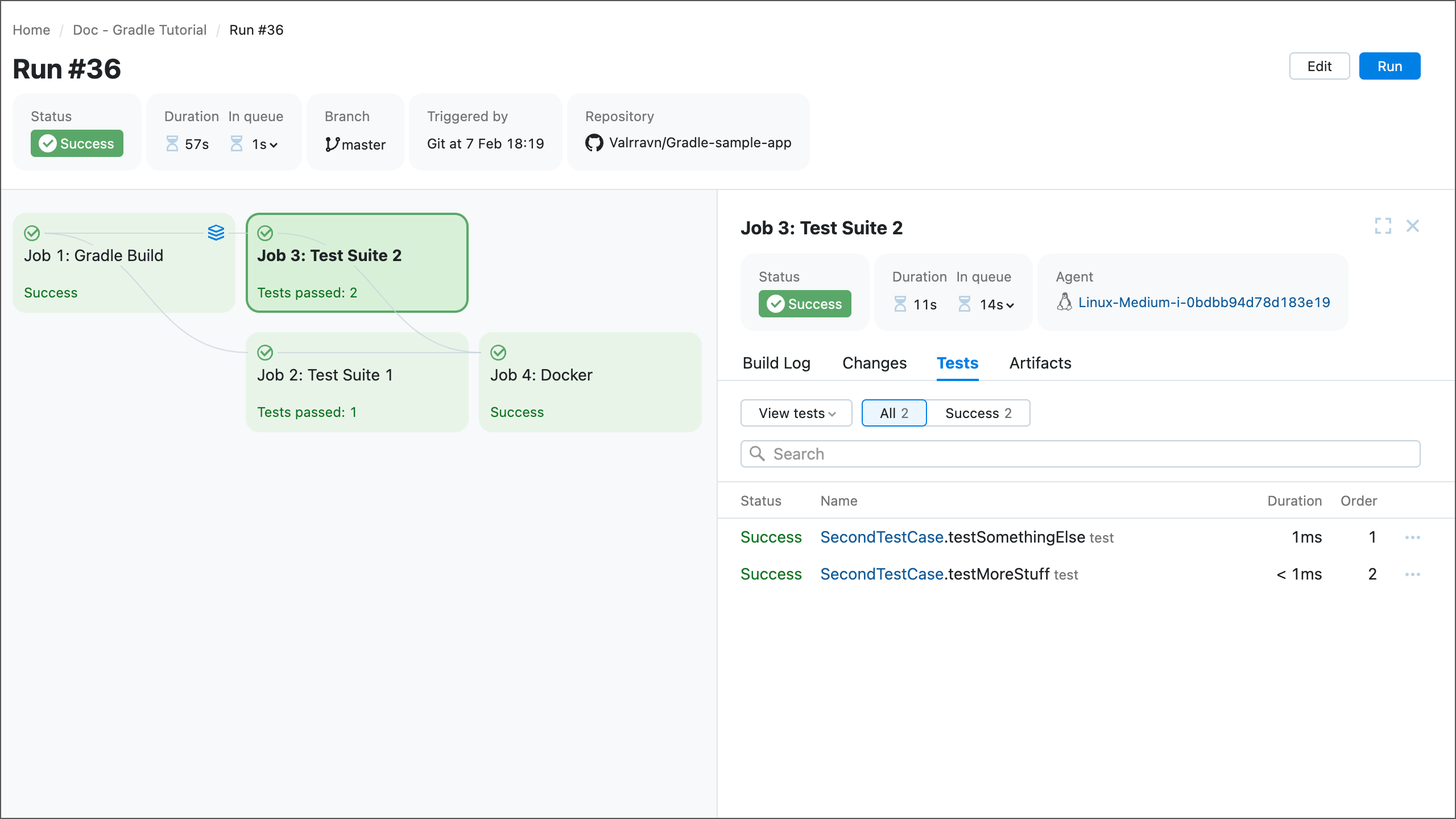
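For orientation, the Job configured so far should correspond to roughly the following YAML fragment, adapted from the reference configuration at the end of this tutorial. The `Job1` identifier, the display name, and the `runs-on` value are taken from that reference; your own Pipeline may show different values depending on how you named the Job and which agent you selected.

```yaml
jobs:
  Job1:
    name: 'Job 1: Gradle Build'
    steps:
      - type: gradle                  # Step type switched from Script to Gradle
        tasks: clean build -x test    # value of the Tasks field
        use-gradle-wrapper: 'true'    # the Use Gradle Wrapper option
    runs-on: Linux-Medium             # agent type; may differ in your Pipeline
    files-publication:
      - build/libs/                   # value of the Artifacts field
```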

Our sample project has two modules with tests, each with its own set of Gradle instructions inside build.gradle files. With Pipelines, you can add different Jobs that run these test sets simultaneously.

On the main page of your Pipeline settings, click Add to create a new Job.

In the Dependencies section of the new Job's settings, tick the checkbox next to the first Job's name. This specifies that the new Job should start only after the first Job finishes. Running tests does not require any files produced at the build stage of our Pipeline, so you can switch the selector to Ignore artifacts.

In Step settings, choose Gradle as the Step type and type `clean test` in the Tasks field.

All new Steps have "repository root" as the default Working directory setting. A working directory is a path on a build agent's local storage that stores files fetched from a remote repository. Whenever you run a Step, it uses files from this local repository copy. If you need to point a Step to a specific directory instead of the repository root folder, specify the required directory path (relative to the root directory). In our case, type `test1` in the Working directory field.

To manually select a Gradle build file, type a path to this file in the corresponding field. Note that the path must be relative to the current working directory. For instance, since our working directory is `test1`, you can type `build.gradle` and TeamCity will use the correct `test1\build.gradle` file rather than the similar file from the project root folder that Job #1 uses.

As with the first Job, ensure the Use Gradle Wrapper setting is on to let TeamCity look for Wrapper files inside the `test1` directory. The Gradle wrapper path should remain empty since the Wrapper resides at the default location.
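As a cross-check, the first testing Job should look approximately like the fragment below in YAML. It is adapted from the reference configuration at the end of this tutorial, so the `Job1_2` identifier, the display name, and the `runs-on` value are examples rather than required values. The plain `- Job1` entry, with no files listed under it, appears to be how the Ignore artifacts mode is recorded.

```yaml
jobs:
  Job1_2:
    name: 'Job 2: Test Suite 1'
    runs-on: Linux-Medium
    steps:
      - type: gradle
        working-directory: test1     # relative to the repository root
        tasks: clean test
        build-file: build.gradle     # resolved relative to working-directory
        use-gradle-wrapper: 'true'
    dependencies:
      - Job1                         # start after Job 1; no files requested
```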

Repeat the preceding steps (from clicking Add through enabling the Gradle Wrapper) to create another Job, but use `test2` instead of `test1` when setting the Working directory. This directory contains its own `build.gradle` and Gradle Wrapper files, so other settings should remain the same.

You now have two Jobs that can start as soon as the first Job finishes building the application. The visual graph splits the sequence of Jobs into separate branches to illustrate this setup. Save and run your Pipeline to ensure both testing Jobs run their Steps simultaneously.
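The branching comes from the dependency lists alone: both testing Jobs name only the first Job, and neither names the other. An abridged sketch, using the Job identifiers from the reference configuration at the end of this tutorial (your identifiers may differ):

```yaml
jobs:
  Job1_2:
    # ...name, runs-on, and steps omitted...
    dependencies:
      - Job1
  Job1_3:
    # ...same settings as Job1_2, but with working-directory: test2...
    dependencies:
      - Job1
```

Because neither testing Job depends on the other, nothing forces them to run one after the other once the first Job has finished.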

Now that your project is built and tested, we can add a final Job that uses a file produced by the first Job to build and publish a Docker image.

On the main Pipeline page, click Add... to create a new Job.

Since our new Job should be the last one to run, tick both testing Job names under the Dependencies section.

Tip: Job 3 prevents this Job from starting since it contains tests that fail by design. If a Job fails, TeamCity fails all subsequent Jobs that depend on it.

To change that, either set your final Job to depend only on Job 2 (in this case, the failing Job 3 will not affect the Docker image building stage), or modify the source code in your forked repository so that the Job 3 tests pass.

Selecting the two testing Jobs in Dependencies is enough to put our new Job at the end of the Pipeline. However, to build a Docker image, it requires the .jar file produced by the very first Job. To give our new Job access to this file, also tick the first Job in Dependencies and ensure the Use artifacts mode is on. Adding this last dependency does not change the order in which Jobs are launched, but ensures the file from Job #1 is shared with Job #4.
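In YAML, the difference between the two kinds of dependency shows up as a nested `files` list. The fragment below is adapted from the reference configuration at the end of this tutorial; the Job identifiers are the ones used there and may differ in your Pipeline.

```yaml
jobs:
  Job4Docker:
    dependencies:
      - Job1:
          files:
            - build/libs/    # files from Job 1 made available to this Job
      - Job1_2               # ordering only
      - Job1_3               # ordering only
```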

Leave the Step type as Script and type the following command as the script body:

    docker build --pull --file ./docker/Dockerfile --tag myusername/myrepositoryname:mycustomtag .

This script runs the `docker build` command with the following parameters:

- `--pull`: Always attempts to pull a newer version of the base image.
- `--file`: The path to the Dockerfile.
- `--tag`: The name (and, optionally, the tag) for your built Docker image.
- `.`: Sets the agent checkout directory as the context for the `docker build` command.
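A minimal sketch of how this Step might appear in the Pipeline's YAML, assuming the command is stored under the `script-content` key as in the reference configuration at the end of this tutorial. The image name `myusername/myrepositoryname:mycustomtag` is the placeholder used above; replace it with your own.

```yaml
jobs:
  Job4Docker:
    steps:
      # A single-line command can be written as a plain YAML string.
      - type: script
        script-content: docker build --pull --file ./docker/Dockerfile --tag myusername/myrepositoryname:mycustomtag .
```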

To publish your built image, use the following command:

    docker push myusername/myrepositoryname:mycustomtag

You can create another Job that runs it, add a separate Step to the final Job, or simply add this line to the existing Step.

If you run the Pipeline now, it will fail because of the `docker push` command: you can pull public images anonymously, but to push images you need to be logged in. To do so, you need to provide the credentials of a user with the "Write" repository permission.

In Job settings, expand the Integrations section, click Add | Docker Repository, and enter valid user credentials.

Tip: You can add integrations inside individual Jobs or on the Pipeline level (inside the Pipeline settings pane).

Integrations created inside the Pipeline settings pane are shared across all Jobs but are initially disabled. To allow a Job to use a required integration, expand the Job's Integrations section and toggle it on.

Integrations created inside a Job settings pane are also available to all Jobs in this Pipeline, but they are immediately active for the Job whose settings are currently being edited.

Save the Pipeline and run it. Check your Docker Hub repository to ensure the last Job uploads a Docker image.
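Once the integration is enabled for the Job, the Job's YAML should list it under `integrations`, roughly as in the fragment below. The name `Docker` is the one that appears in the reference configuration at the end of this tutorial; an integration you create may be named differently.

```yaml
jobs:
  Job4Docker:
    integrations:
      - Docker    # name of the Docker Repository integration; yours may differ
```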

You now have a Pipeline that builds and tests your sample application, builds a Docker image from it, and publishes this image to Docker Hub. As an additional customization, you may want to replace plain string values in your Script step with references to parameters.

Open Pipeline settings and add two new Parameters:

- `DImageName`: stores the name of your Docker image.
- `DRepoName`: stores your Docker Hub registry name in the `your_user_name/repository_name` format.
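In YAML, Pipeline-level parameters are stored in a top-level `parameters` section. The values below are the placeholders from the reference configuration at the end of this tutorial, not values you should copy as-is:

```yaml
parameters:
  DImageName: SpringBoot                # example value; use your own image name
  DRepoName: username/registry_name     # placeholder; use your Docker Hub user and repository
```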

You can now avoid repetitively entering image and registry names, and instead use the `%parameter_name%` syntax to reference parameters that store these values. In our case, you can modify the script of the last Job as follows:

    docker build --pull --file ./docker/Dockerfile --tag %DRepoName%:%DImageName%-%build.number% .
    docker push %DRepoName%:%DImageName%-%build.number%

Note that the updated script uses a reference to the `build.number` parameter. This is a predefined TeamCity parameter that stores the number of the current build.

The final Pipeline should have the following YAML configuration. You can go to Pipeline settings, switch the editing mode from Visual to YAML, and compare your current settings with this reference configuration to check whether some of your settings are missing or have different values.

    name: Doc - Gradle Tutorial
    jobs:
      Job1:
        name: 'Job 1: Gradle Build'
        steps:
          - type: gradle
            tasks: clean build -x test
            use-gradle-wrapper: 'true'
        runs-on: Linux-Medium
        files-publication:
          - build/libs/
      Job1_2:
        name: 'Job 2: Test Suite 1'
        runs-on: Linux-Medium
        steps:
          - type: gradle
            working-directory: test1
            tasks: clean test
            build-file: build.gradle
            use-gradle-wrapper: 'true'
        dependencies:
          - Job1
      Job1_3:
        name: 'Job 3: Test Suite 2'
        runs-on: Linux-Medium
        steps:
          - type: gradle
            working-directory: test2
            tasks: clean test
            build-file: build.gradle
            use-gradle-wrapper: 'true'
        dependencies:
          - Job1
      Job4Docker:
        name: 'Job 4: Docker'
        runs-on: Linux-Medium
        steps:
          - type: script
            script-content: >-
              docker build --pull --file ./docker/Dockerfile --tag
              %DRepoName%:%DImageName%-%build.number% .

              docker push %DRepoName%:%DImageName%-%build.number%
        dependencies:
          - Job1:
              files:
                - build/libs/
          - Job1_2
          - Job1_3
        integrations:
          - Docker
    parameters:
      DImageName: SpringBoot
      DRepoName: username/registry_name