Spark Submit run configuration

With the Spark plugin, you can execute applications on Spark clusters. IntelliJ IDEA provides run/debug configurations to run the spark-submit script in Spark’s bin directory. You can execute an application locally or using an SSH configuration.

Install the Spark plugin

This functionality relies on the Spark plugin, which you need to install and enable.

Press Ctrl+Alt+S to open settings and then select .

Open the Marketplace tab, find the Spark plugin, and click Install (restart the IDE if prompted).

Run an application with the Spark Submit configurations

Go to . Alternatively, press Alt+Shift+F10, then 0.

Click the Add New Configuration button and select .

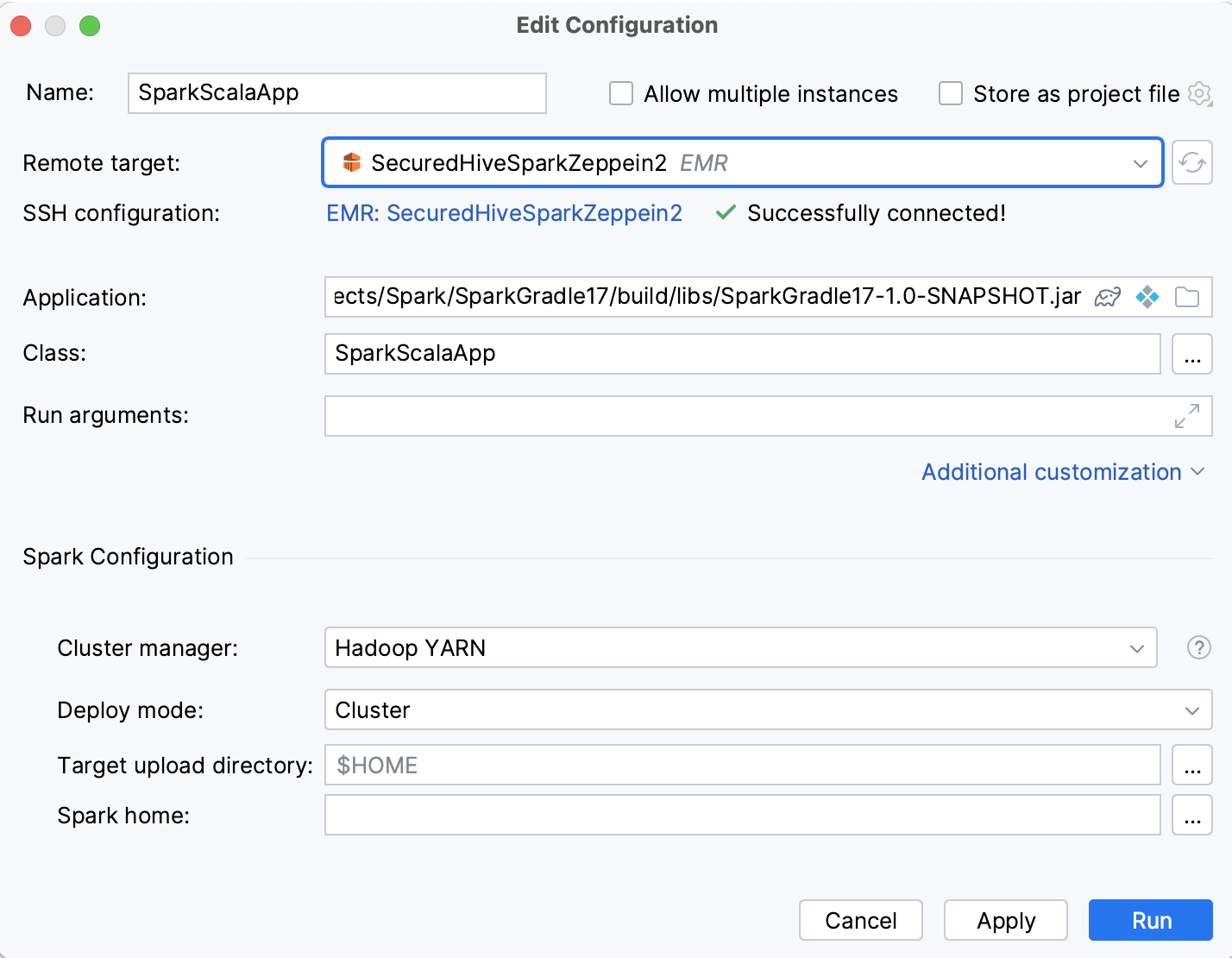

Enter the run configuration name.

In the Remote target list, do one of the following:

If you have connected to an AWS EMR cluster, you can upload your application to it.

If you have SSH configurations, you can use them to submit applications to a custom remote server.

Otherwise, click Add EMR Connection or Add SSH Connection.

In the Application field, select a way to obtain the application file:

Select a Gradle task and artifact.

Select an IDEA artifact.

Or upload a JAR or ZIP file from your local machine.

In the Class field, type the name of the main class of the application.

You can also specify optional parameters:

Run arguments: arguments to run the application.

Under Spark Configuration, set up:

Cluster manager: select the management method to run an application on a cluster. SparkContext can connect to several types of cluster managers (either Spark’s own standalone cluster manager, Mesos, or YARN). See more details in the Cluster Mode Overview.

Deploy mode: cluster or client.

Target upload directory: the directory on the remote host to which the executable files are uploaded.

Spark home: a path to the Spark installation directory.

Configs: arbitrary Spark configuration properties in key=value format.

Properties file: the path to a file with Spark properties.
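The plugin's exact command line is not shown in this article. As a rough sketch, the Spark Configuration fields above correspond to standard spark-submit options (`--master`, `--deploy-mode`, `--class`, `--conf`, `--properties-file`). The helper name `build_submit_args` and all paths, class names, and values below are hypothetical and only illustrate the mapping.

```python
import shlex


def build_submit_args(spark_home, master, deploy_mode, main_class, app_file,
                      configs=None, properties_file=None, run_args=()):
    """Assemble a spark-submit argument list from the configuration fields."""
    args = [
        f"{spark_home}/bin/spark-submit",  # Spark home
        "--master", master,                # Cluster manager
        "--deploy-mode", deploy_mode,      # Deploy mode: cluster or client
        "--class", main_class,             # Class
    ]
    # Each Configs entry becomes its own --conf key=value pair.
    for key, value in (configs or {}).items():
        args += ["--conf", f"{key}={value}"]
    if properties_file:
        args += ["--properties-file", properties_file]
    # The application file comes after the options, followed by the run arguments.
    args.append(app_file)
    args.extend(run_args)
    return args


# Hypothetical values, chosen only for illustration.
command = build_submit_args(
    spark_home="/opt/spark",
    master="yarn",
    deploy_mode="cluster",
    main_class="com.example.WordCount",
    app_file="/tmp/uploads/wordcount.jar",
    configs={"spark.sql.shuffle.partitions": "64"},
    run_args=["input.txt"],
)
print(shlex.join(command))
```

Keeping the command as a list until the last moment avoids quoting problems when a value contains spaces.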

Spark Debug (available for Java and Scala applications):

Start Spark driver with debug agent: start the JDWP agent when you launch this run/debug configuration. This lets you attach a debugger once the application is running. Disabling the option disables the debug mode of this configuration.

Listening port: specify a debugger port on the server. Leave empty to dynamically assign an available port at runtime.

Suspend driver: suspend the Spark driver process until the debugger is attached (the suspend=y JDWP agent option). Applied only in debug mode. In run mode, suspend=n is always used.
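To make the interaction between these options concrete, here is a minimal sketch of how a JDWP agent string for the driver JVM could be composed. The function name `jdwp_agent_option` is hypothetical, and how the plugin actually passes the agent to the driver is an assumption. The `-agentlib:jdwp` syntax itself is the standard JVM one.

```python
def jdwp_agent_option(debug_mode, suspend_driver, port=None):
    """Build a JDWP agent string for the Spark driver JVM."""
    # suspend=y applies only in debug mode; run mode always uses suspend=n.
    suspend = "y" if (debug_mode and suspend_driver) else "n"
    option = f"-agentlib:jdwp=transport=dt_socket,server=y,suspend={suspend}"
    # With no address given, the JVM chooses an available port at startup.
    if port is not None:
        option += f",address={port}"
    return option


# Debug mode with Suspend driver selected and an explicit listening port.
print(jdwp_agent_option(debug_mode=True, suspend_driver=True, port=5005))
# Run mode: the Suspend driver setting is ignored.
print(jdwp_agent_option(debug_mode=False, suspend_driver=True, port=5005))
```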

Under Dependencies, select files and archives (jars) that are required for the application to be executed.

Under Maven, select Maven-specific dependencies. You can add repositories or exclude some packages from the execution context.

Under Driver, select Spark driver settings, such as the amount of memory to use for the driver process. In cluster mode, you can also specify the number of cores.

Under Executor, select executor settings, such as the amount of memory and the number of cores.
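The Dependencies, Maven, Driver, and Executor settings have close counterparts among the standard spark-submit options. The sketch below assumes a one-to-one mapping, which this article does not confirm. The helper name `resource_args` and the sample values are hypothetical.

```python
def resource_args(jars=(), files=(), packages=(), exclude_packages=(),
                  repositories=(), driver_memory=None, driver_cores=None,
                  executor_memory=None, executor_cores=None):
    """Translate dependency and resource settings into spark-submit options."""
    args = []
    # spark-submit expects each of these as one comma-separated value.
    list_options = (
        ("--jars", jars),                          # Dependencies: JAR archives
        ("--files", files),                        # Dependencies: plain files
        ("--packages", packages),                  # Maven coordinates
        ("--exclude-packages", exclude_packages),  # Maven exclusions
        ("--repositories", repositories),          # Extra Maven repositories
    )
    for flag, values in list_options:
        if values:
            args += [flag, ",".join(values)]
    scalar_options = (
        ("--driver-memory", driver_memory),
        ("--driver-cores", driver_cores),  # Honored in cluster mode only
        ("--executor-memory", executor_memory),
        ("--executor-cores", executor_cores),
    )
    for flag, value in scalar_options:
        if value is not None:
            args += [flag, str(value)]
    return args


# Illustrative values only.
args = resource_args(
    jars=["libs/util.jar", "libs/io.jar"],
    packages=["org.apache.spark:spark-avro_2.12:3.5.0"],
    driver_memory="2g",
    driver_cores=2,
    executor_memory="4g",
    executor_cores=4,
)
print(args)
```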

Kerberos: settings for establishing a secured connection with Kerberos.

Shell options: select if you want to execute any scripts before running spark-submit.

Enter the path to bash and specify the script to be executed. It is recommended to provide an absolute path to the script.

Select the Interactive checkbox if you want to launch the script in the interactive mode. You can also specify environment variables, for example, USER=jetbrains.

Advanced Submit Options:

Proxy user: a username that is enabled for using proxy for the Spark connection.

Driver Java options, Driver library path, and Driver class path: additional driver options. For more information, refer to Runtime Environment.

Archives: comma-separated list of archives to be extracted into the working directory of each executor.

Print additional debug output: run spark-submit with the --verbose option to print debugging information.
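As an illustration, the advanced options above line up with the spark-submit flags `--proxy-user`, `--driver-java-options`, `--driver-library-path`, `--driver-class-path`, `--archives`, and `--verbose`. The following sketch assumes that mapping. The helper name `advanced_args` and the sample values are hypothetical.

```python
def advanced_args(proxy_user=None, driver_java_options=None,
                  driver_library_path=None, driver_class_path=None,
                  archives=(), verbose=False):
    """Translate the advanced submit options into spark-submit flags."""
    args = []
    single_value_options = (
        ("--proxy-user", proxy_user),
        ("--driver-java-options", driver_java_options),
        ("--driver-library-path", driver_library_path),
        ("--driver-class-path", driver_class_path),
    )
    for flag, value in single_value_options:
        if value:
            args += [flag, value]
    # Archives are passed as one comma-separated list.
    if archives:
        args += ["--archives", ",".join(archives)]
    # --verbose is a bare switch with no value.
    if verbose:
        args.append("--verbose")
    return args


# Illustrative values only.
extra = advanced_args(
    proxy_user="etl",
    driver_java_options="-Dlog.level=DEBUG",
    archives=["env.zip", "data.tar.gz"],
    verbose=True,
)
print(extra)
```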

Click OK to save the configuration. Then select the configuration from the list of created configurations and run it.

Inspect the execution results in the Run tool window.

See also Create and run Spark application on cluster for an example of how to use this run configuration and monitor the execution results.