Security

JetBrains Trust Center

Our Trust Center provides comprehensive information on:

our infrastructure security

organizational security policies and data privacy procedures

non-public documents: SOC2 report, penetration testing reports, etc.

answers to frequently asked questions.

CVE assessment and response

We regularly run automated scans of the OCI images we ship to customers and assess the impact of the findings.

We treat a Critical CVE as grounds for releasing a new minor version of Datalore, provided our assessment finds that the CVE's attack vector applies to any user-interactable part of Datalore.

However, not every vulnerability gives an attacker a vector to compromise Datalore components. For example, the command-line argument parser of a CLI tool shipped with the Datalore notebook agent might be vulnerable, but this does not directly affect the Datalore agent itself. Moreover, such a tool can easily be uninstalled or upgraded by modifying the baseline image.

Because of the gaps between Datalore releases, you might detect a CVE that has not yet been addressed in the current stable release. We still address less impactful vulnerabilities, but we do so within our planned release cycle.
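The triage rule described above can be sketched as a small decision function. This is only an illustration of the stated policy, not our internal tooling: the `Finding` type, its field names, and the returned labels are hypothetical, and the `reachable_by_users` flag stands in for the outcome of the manual impact assessment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    """One scanner finding after impact assessment (hypothetical shape)."""
    cve_id: str
    severity: str             # scanner-reported severity, e.g. "CRITICAL"
    reachable_by_users: bool  # assumption: result of the manual assessment


def release_track(finding: Finding) -> str:
    """Map a finding to the release track described in the text."""
    is_critical = finding.severity.upper() == "CRITICAL"
    if is_critical and finding.reachable_by_users:
        return "new minor release"
    # Everything else is still fixed, but on the regular schedule.
    return "planned release cycle"


# Placeholder identifiers, not real CVEs.
exposed = Finding("CVE-0000-0001", "CRITICAL", reachable_by_users=True)
cli_only = Finding("CVE-0000-0002", "CRITICAL", reachable_by_users=False)
minor = Finding("CVE-0000-0003", "MEDIUM", reachable_by_users=True)
```

Under this sketch, a Critical finding in an unreachable CLI argument parser lands on the planned release cycle, which matches the example given above.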

If you believe you have identified a critical vulnerability that might impact your operations, please contact our Support.

Sensitive data flow within Datalore

By its nature, Datalore can come into possession of sensitive data if it is instructed to connect to a data source (a database or object storage) that contains such data.

However, only three Datalore components can technically process such data: notebook agents, SQL session runtimes, and notebook outputs. The rest of this section explains this in more detail.

The following diagrams describe four different data flows in which external sensitive data (such as database contents or private keys) is involved.

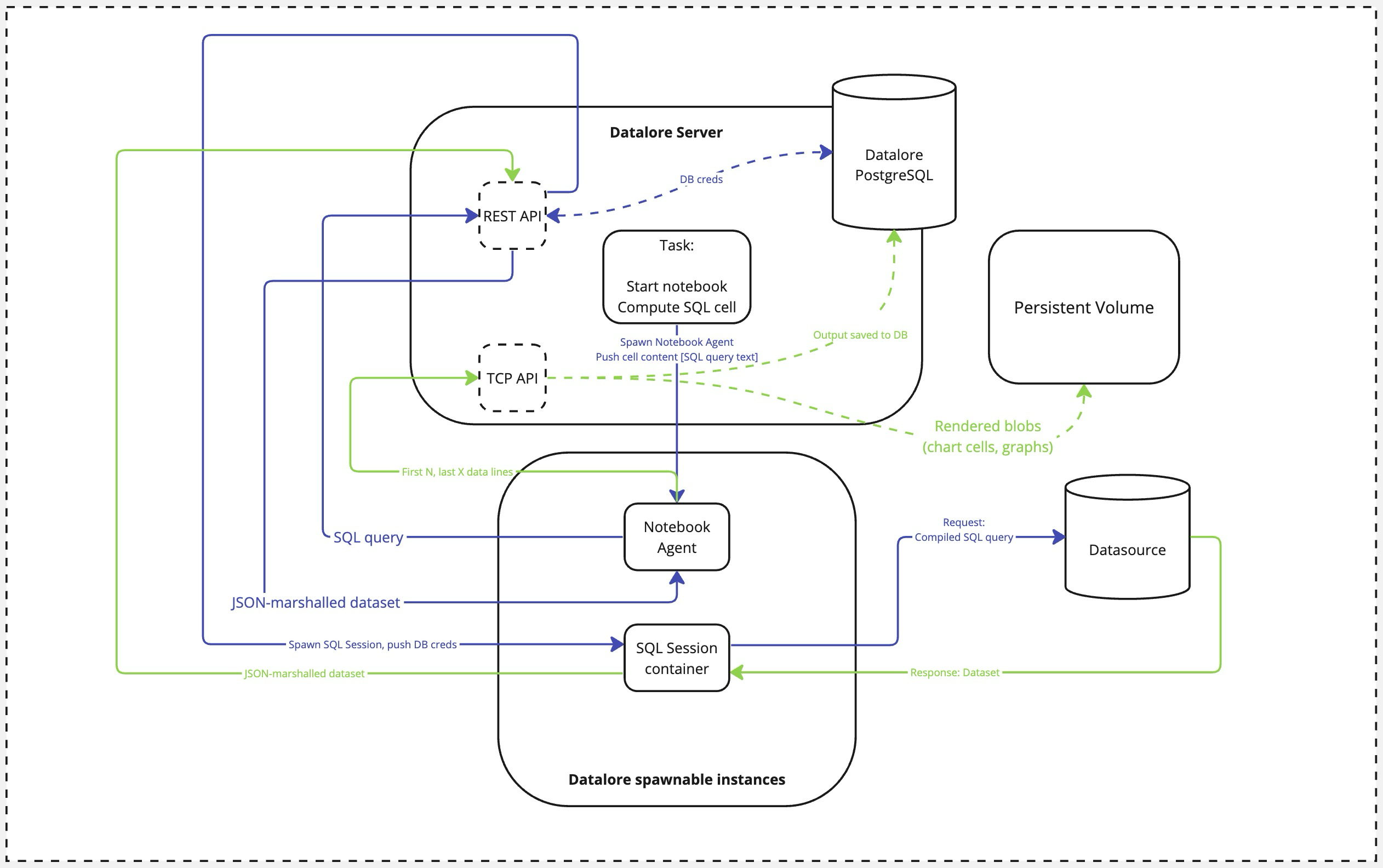

SQL cell execution data flow

As one of the core concepts in Datalore, the notebook can interact with a remote data source if instructed by the user. For example, an authenticated Datalore user modifies the source code by adding an SQL statement to an SQL cell attached to a pre-configured database connection.

An external actor triggers an SQL cell computation event. The event is initiated by the user, either directly or programmatically (see Run notebooks using API).

Datalore spawns a new Notebook Agent container.

The Notebook Agent contacts Datalore's REST API, passing over the SQL query string.

Datalore requests the database credentials and spawns an SQL Session container, passing over these credentials and the SQL query.

The SQL Session compiles the query (performing all the variable substitutions according to the selected SQL dialect) and queries the database.

Once the full dataset is fetched, the SQL Session container converts it to JSON and sends it back to Datalore's REST API.

The REST API returns the result to the notebook.

During the task teardown, the Notebook Agent caches the first N and the last X values of the result (both are currently non-configurable and set to 100) over Datalore's TCP API. This slice of the dataset is saved to Datalore's PostgreSQL database. Additionally, all rendered blobs (such as generated images or chart cell outputs) are saved to Datalore's persistent storage using the same TCP API.
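To make the size of the cached slice concrete, the last step can be sketched as follows. This is a minimal illustration, not the Notebook Agent's actual code: the function name, the constants, and the handling of results shorter than 200 rows are assumptions.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")

# Both limits are fixed at 100 according to the text above.
HEAD_ROWS = 100
TAIL_ROWS = 100


def preview_slice(rows: Sequence[T],
                  head: int = HEAD_ROWS,
                  tail: int = TAIL_ROWS) -> List[T]:
    """Return the first `head` and the last `tail` rows of a result set.

    Assumption: when the result is short enough that the two ranges
    would overlap, every row is kept exactly once.
    """
    if len(rows) <= head + tail:
        return list(rows)
    return list(rows[:head]) + list(rows[len(rows) - tail:])


# A 1000-row result is reduced to 200 cached rows.
cached = preview_slice(list(range(1000)))
```

Under these assumptions, at most 200 rows of any query result reach the PostgreSQL database, regardless of how large the fetched dataset is.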

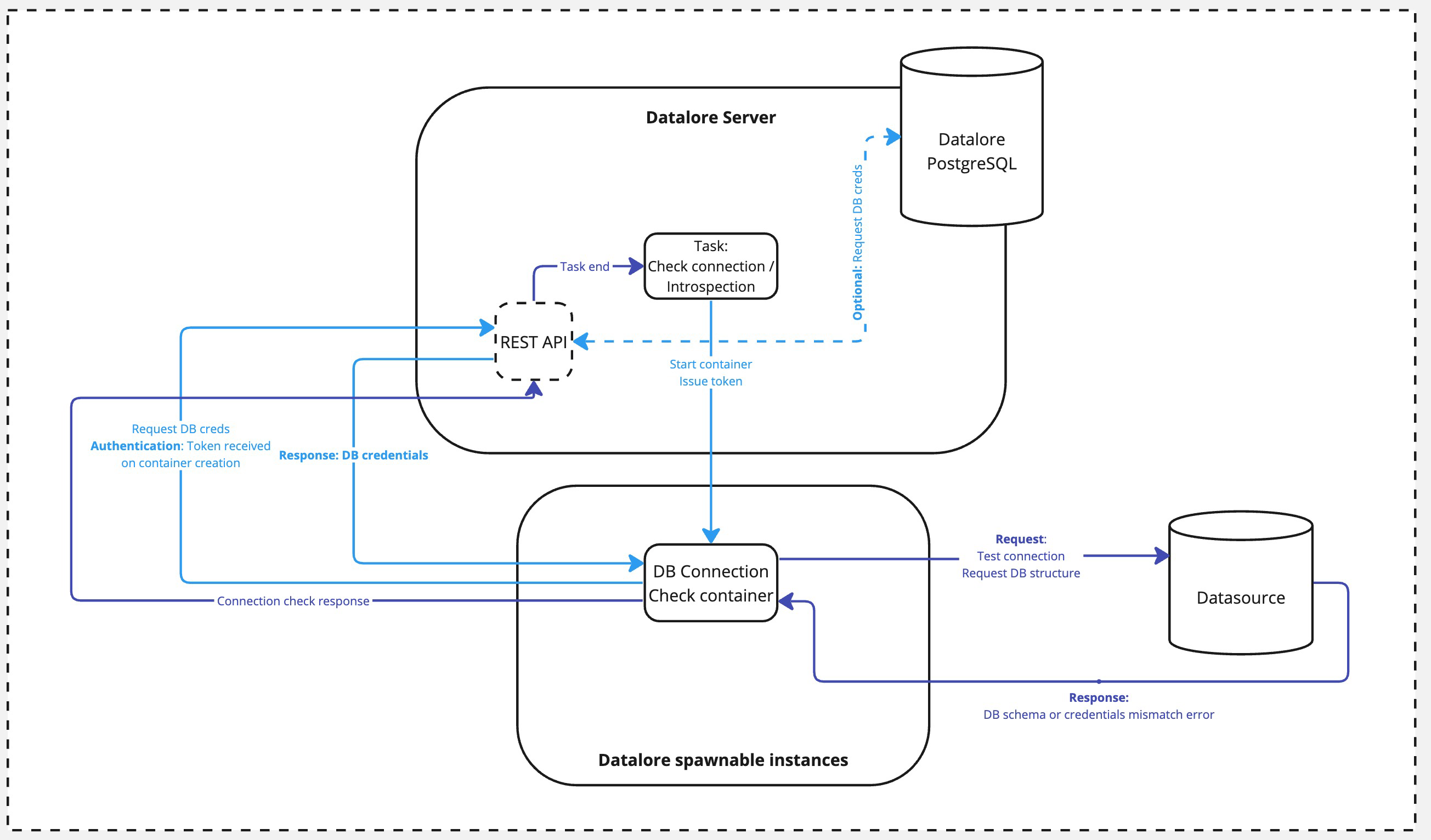

Database introspection data flow

Apart from running SQL queries that are explicitly provided and executed within the notebook context, Datalore also performs database introspection as a background task to improve the user experience of SQL cells.

An external actor triggers an introspection task. The actor can be either the user, through a UI action, or the Datalore server itself, as part of a routine maintenance or cache update flow.

A DB Connection Check container is spawned. At this step, the Server issues a one-time token for the container.

Once spawned, the container calls Datalore's REST API and authenticates with the token from the previous step. The credentials are either requested from the database (if connected already) or taken from the user input (if triggered by a UI action and there is no connection established yet).

The credentials are passed to the Connection Check container, which uses them to connect to the database.

The response is passed back to Datalore's REST API. Once this is done, the Connection Check container is shut down.
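The one-time token handshake in this flow can be illustrated with a short sketch. It is an assumption-laden illustration rather than the Server's implementation: the `OneTimeTokens` class, its method names, and the choice to store only a SHA-256 digest of each token are all hypothetical.

```python
import hashlib
import secrets
from typing import Dict, Optional


class OneTimeTokens:
    """Issue tokens that can be redeemed exactly once (hypothetical helper)."""

    def __init__(self) -> None:
        # Maps a token digest to the container the token was issued for.
        self._pending: Dict[str, str] = {}

    @staticmethod
    def _digest(token: str) -> str:
        return hashlib.sha256(token.encode("utf-8")).hexdigest()

    def issue(self, container_id: str) -> str:
        """Create a random token bound to one container."""
        token = secrets.token_urlsafe(32)
        self._pending[self._digest(token)] = container_id
        return token

    def redeem(self, token: str) -> Optional[str]:
        """Return the container id on first use, None on reuse or unknown token."""
        return self._pending.pop(self._digest(token), None)


tokens = OneTimeTokens()
token = tokens.issue("connection-check-1")
first_use = tokens.redeem(token)
second_use = tokens.redeem(token)
```

Because `redeem` removes the entry, a token captured from a finished container cannot be replayed against the REST API in this sketch.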

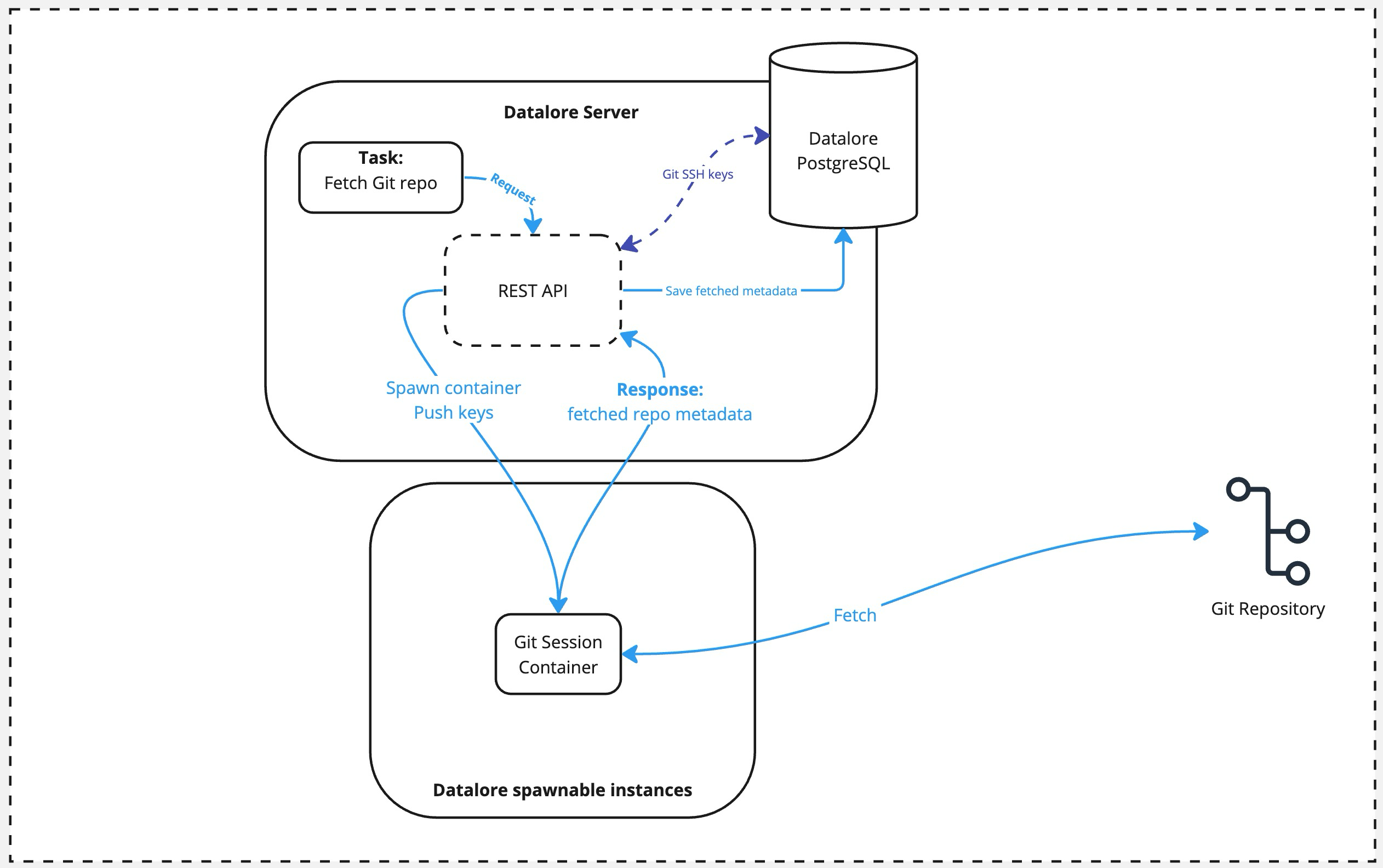

Git repo fetching data flow

An external actor calls the Git fetch task via the REST API.

Datalore spawns the Git Session container, pushing the private key used for authentication to this container.

Once the container is spawned, it runs `git fetch` against the provided repo and returns the result to Datalore's API. The container is terminated afterward.

As a final step, the fetched metadata is saved to Datalore's database.
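The sketch below shows one common way a short-lived process can run a fetch with a private key it was handed, using Git's `GIT_SSH_COMMAND` environment variable. It is not the Git Session container's actual code: the function names and the temporary-file handling are assumptions made for illustration.

```python
import os
import shlex
import subprocess
import tempfile
from typing import Dict


def git_ssh_env(key_path: str) -> Dict[str, str]:
    """Build an environment that makes git use only the given SSH key."""
    env = dict(os.environ)
    env["GIT_SSH_COMMAND"] = (
        f"ssh -i {shlex.quote(key_path)} -o IdentitiesOnly=yes"
    )
    return env


def fetch_with_key(repo_dir: str, private_key: str) -> str:
    """Run `git fetch` in repo_dir, keeping the key on disk only briefly."""
    with tempfile.TemporaryDirectory() as tmp:
        key_path = os.path.join(tmp, "id_key")
        # Create the key file with owner-only permissions; ssh rejects
        # private keys that are readable by other users.
        fd = os.open(key_path, os.O_WRONLY | os.O_CREAT | os.O_EXCL, 0o600)
        with os.fdopen(fd, "w") as key_file:
            key_file.write(private_key)
        result = subprocess.run(
            ["git", "fetch"],
            cwd=repo_dir,
            env=git_ssh_env(key_path),
            capture_output=True,
            text=True,
            check=True,
        )
    # The temporary directory, including the key, is gone at this point.
    return result.stdout + result.stderr
```

In this sketch the key never outlives the `with` block, which mirrors the idea that the credentials exist only for the lifetime of the session container.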

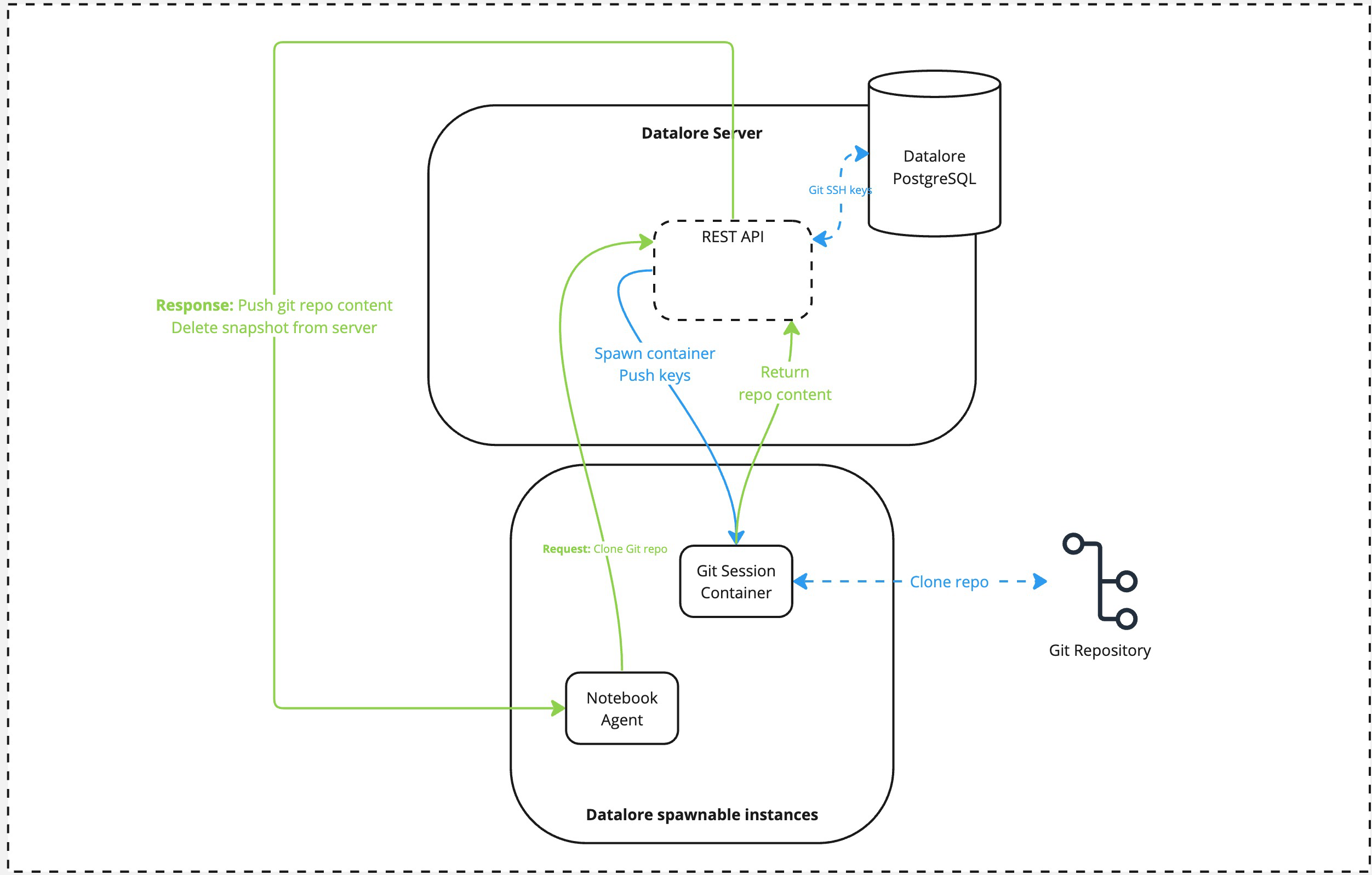

Git repo pulling data flow

An external actor calls the Git pull task via the notebook agent. The notebook agent calls Datalore's REST API.

Datalore spawns the Git Session container, pushing the private key used for authentication to this container.

Once the container is spawned, it runs `git pull` against the provided repo and returns a tarball with the pulled repo contents to Datalore's API. The container is terminated afterward.

At this step, Datalore pushes the tarball back to the notebook agent. The tarball is deleted from the server once the operation is complete.
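The pack, hand over, and delete sequence can be sketched with the Python standard library. This is only an illustration under stated assumptions: the function names are hypothetical, the `send` callback stands in for whatever transport delivers the tarball to the notebook agent, and the gzip format is a guess.

```python
import os
import tarfile
import tempfile
from typing import Callable


def pack_repo(repo_dir: str) -> str:
    """Pack a pulled repo into a temporary .tar.gz and return its path."""
    fd, tar_path = tempfile.mkstemp(suffix=".tar.gz")
    os.close(fd)
    with tarfile.open(tar_path, "w:gz") as tar:
        tar.add(repo_dir, arcname=".")
    return tar_path


def deliver_and_delete(tar_path: str, send: Callable[[bytes], None]) -> None:
    """Hand the tarball to `send`, then remove it even if sending fails."""
    try:
        with open(tar_path, "rb") as tar_file:
            send(tar_file.read())
    finally:
        os.remove(tar_path)
```

Placing the removal in a `finally` block means the tarball does not linger on the server when delivery raises an error, which is the property the last step above describes.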