Install on a Kubernetes cluster using Helm charts

The instructions in this article describe the installation of Datalore On-Premises on a Kubernetes cluster using Helm.

The chapters in this section describe how to install, configure, and update Datalore On-Premises in a Kubernetes deployment (Helm charts method).

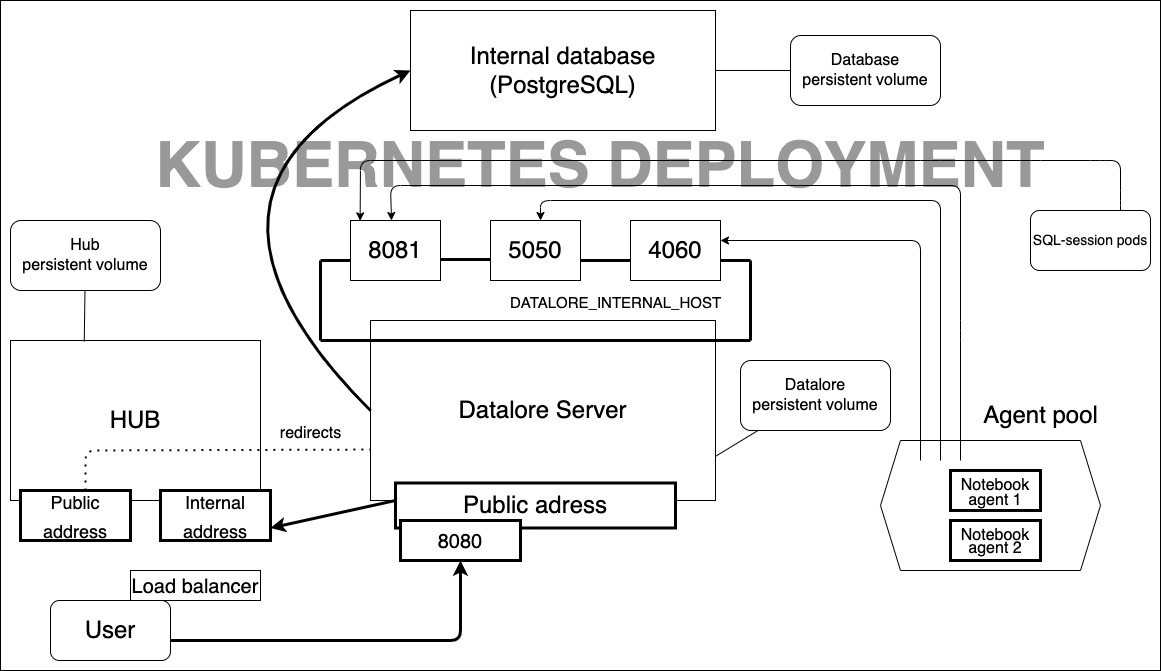

This is what Kubernetes-based setup for Datalore looks like:

You will learn how to do the following:

Basic installation: You complete the basic procedure to get Datalore On-Premises up and running on the infrastructure of your choice.

Required and optional configuration procedures: You customize and configure Datalore On-Premises. Some of these configurations are essential for you to start working on your projects.

Upgrade procedure: You upgrade your version of Datalore On-Premises. We duly notify you of our new releases.

warning

Currently, we only support 64-bit Linux as a host system. ARM-based platforms, macOS, and Windows are not supported.

- Prerequisites

Before installation, make sure that you have the following:

Kubernetes cluster

kubectl installed on your machine and configured to work with this cluster

Helm

This installation was tested with Kubernetes v1.30 and Helm v3.17, but other versions may work too.

note

If you use a reverse proxy, we recommend that you enable gzip compression by following these instructions.

Datalore's Reactive mode may not operate properly on an Amazon EKS cluster with Amazon Linux compute nodes (the default option). We recommend that you use Ubuntu 22.04 with the corresponding AMIs designed specifically for EKS.

Here are our tips for AWS EKS deployments:

To find an AMI for manual setup, follow this link and select your option based on the cluster version and region.

To configure the cluster deployment using Terraform, you can refer to this sample file.

Follow these steps to install Datalore using Helm.

Add the Datalore Helm repository:

    helm repo add datalore https://jetbrains.github.io/datalore-configs/charts

Create a datalore.values.yaml file. This file will be used later as the source of truth for the Datalore configuration, so we advise putting it under version control.

Add the dataloreEnv block to the file as follows, replacing the value between the quotes with the actual URL you plan to use to access Datalore:

    dataloreEnv:
      DATALORE_PUBLIC_URL: "...."

tip

Make sure the URL does not contain a trailing slash.
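The two steps above can be scripted. Below is a minimal bash sketch, not an official tool: https://datalore.example.com/ is a hypothetical placeholder, and the helper names are invented for this example. The script removes any trailing slashes before printing a dataloreEnv block that you can paste into datalore.values.yaml.

```shell
#!/usr/bin/env bash
# Sketch: print the dataloreEnv block with DATALORE_PUBLIC_URL normalized.

# Remove every trailing "/" from a URL, since DATALORE_PUBLIC_URL must not end with one.
normalize_url() {
  local url="$1"
  while [ "${url%/}" != "$url" ]; do
    url="${url%/}"
  done
  printf '%s\n' "$url"
}

# Print the YAML block to paste into datalore.values.yaml.
print_datalore_env() {
  local url
  url="$(normalize_url "$1")"
  cat <<EOF
dataloreEnv:
  DATALORE_PUBLIC_URL: "${url}"
EOF
}

# Placeholder endpoint (hypothetical); replace it with your own.
print_datalore_env "https://datalore.example.com/"
```

Redirect the output to a file with > only when creating datalore.values.yaml from scratch, since > overwrites an existing file.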

Create a Kubernetes secret for storing the database password securely.

Generate the password and store it in the Kubernetes secret, as shown below. The pwgen tool is used here as an example; you can use any other tool or method to generate a password.

    PASSWORD=$(pwgen -N1 -y 32)
    kubectl create secret generic datalore-db-password --from-literal=DATALORE_DB_PASSWORD="$PASSWORD"

Modify (or add, if not present yet) the databaseSecret block in your datalore.values.yaml as follows:

    databaseSecret:
      create: false
      name: datalore-db-password
      key: DATALORE_DB_PASSWORD

The name value refers to the secret name defined in the previous step, while the key value refers to the key within the secret that contains the password.
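If pwgen is not available on your machine, the password can come from another source. The bash sketch below uses pwgen when it is installed and otherwise falls back to reading 32 alphanumeric characters from /dev/urandom; the fallback, the gen_password helper, and the APPLY variable are assumptions of this sketch. Unless APPLY=1 is set, it only prints the kubectl command, with the password masked.

```shell
#!/usr/bin/env bash
# Sketch: generate a 32-character database password and create the secret.

gen_password() {
  if command -v pwgen >/dev/null 2>&1; then
    # Same invocation as in the steps above: one 32-character password with symbols.
    pwgen -N1 -y 32
  else
    # Fallback (assumption): 32 alphanumeric characters from /dev/urandom.
    LC_ALL=C tr -dc 'A-Za-z0-9' </dev/urandom | head -c 32
    echo
  fi
}

PASSWORD="$(gen_password)"

# Secret name and key must match the databaseSecret block in datalore.values.yaml.
cmd=(kubectl create secret generic datalore-db-password
     --from-literal=DATALORE_DB_PASSWORD="$PASSWORD")

if [ "${APPLY:-0}" = "1" ]; then
  "${cmd[@]}"
else
  # Dry run: show the command, but never the password itself.
  echo "would run: ${cmd[*]:0:5} --from-literal=DATALORE_DB_PASSWORD=<hidden>"
fi
```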

tip

If, for any reason, you do not want to create the secret manually, you can specify the password in the Helm config file instead. In this case, the secret is provisioned automatically, but keep in mind that the password will be stored in plain text in your configuration file.

In that scenario, adjust the databaseSecret block in datalore.values.yaml as follows:

    databaseSecret:
      create: true
      password: xxxx

(Optional) If you are moving from plain-text password storage to the secret reference, remove the password key and its value from the databaseSecret block.

Proceed based on whether this is a fresh deployment or Datalore is already installed:

Fresh deployment: Proceed with the installation. No further action is required.

Datalore is already installed: Apply the configuration as shown below.

warning

If you proceed with this step, the Datalore server will restart.

    helm upgrade --install -f datalore.values.yaml datalore datalore/datalore --version 0.2.28
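helm upgrade --install installs the release if it does not exist yet and upgrades it otherwise, so the same command covers both scenarios. The sketch below wraps it in a bash function that refuses to run when the values file is missing. CHART_VERSION, VALUES_FILE, APPLY, and apply_datalore are names introduced for illustration; by default, the function only prints the command.

```shell
#!/usr/bin/env bash
# Sketch: apply datalore.values.yaml with "helm upgrade --install".
# CHART_VERSION, VALUES_FILE, and APPLY are variables introduced for this sketch.
CHART_VERSION="${CHART_VERSION:-0.2.28}"
VALUES_FILE="${VALUES_FILE:-datalore.values.yaml}"

apply_datalore() {
  # Refuse to run without the values file, the source of truth for the config.
  if [ ! -f "$VALUES_FILE" ]; then
    echo "error: $VALUES_FILE not found" >&2
    return 1
  fi
  local cmd=(helm upgrade --install -f "$VALUES_FILE"
             datalore datalore/datalore --version "$CHART_VERSION")
  if [ "${APPLY:-0}" = "1" ]; then
    "${cmd[@]}"          # installs the release, or upgrades it if it exists
  else
    echo "${cmd[*]}"     # dry run: only print the command
  fi
}
```

Source the file and call apply_datalore to review the command, then run APPLY=1 apply_datalore to execute it.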

Datalore requires a PostgreSQL database (version 15 or later) to operate.

If you want to use the built-in PostgreSQL database shipped with Datalore, skip this step: by default, the Helm chart used for Datalore's deployment provisions a single-instance PostgreSQL database.

However, if you want to use an externally configured database, add a new parameter to datalore.values.yaml as follows:

    internalDatabase: false

Additionally, specify the database connection string explicitly by adjusting the dataloreEnv block defined previously, as follows:

    dataloreEnv:
      # any other previously defined parameters
      DB_USER: "<database_user>"
      DB_URL: "jdbc:postgresql://[database_host]:[database_port]/[database_name]"

note

It is also possible to use a custom JDBC driver to connect to Datalore's database. See Using custom Postgres driver for further guidance.
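The following bash sketch assembles DB_URL from its parts and prints the values to add for an external database. The host, port, database name, and user are hypothetical placeholders (5432 is the PostgreSQL default port), and build_db_url and print_external_db_values are helper names invented for this example.

```shell
#!/usr/bin/env bash
# Sketch: print the values for an external PostgreSQL database.
# Host, port, database name, and user below are hypothetical placeholders.
DB_HOST="${DB_HOST:-postgres.example.internal}"
DB_PORT="${DB_PORT:-5432}"
DB_NAME="${DB_NAME:-datalore}"
DB_USER="${DB_USER:-datalore}"

# Build a JDBC URL of the form jdbc:postgresql://host:port/database.
build_db_url() {
  local host="$1" port="${2:-5432}" name="$3"
  case "$port" in
    *[!0-9]*) echo "error: port must be numeric: $port" >&2; return 1 ;;
  esac
  if [ -z "$host" ] || [ -z "$name" ]; then
    echo "error: host and database name are required" >&2
    return 1
  fi
  printf 'jdbc:postgresql://%s:%s/%s\n' "$host" "$port" "$name"
}

# Print the internalDatabase flag and the dataloreEnv entries.
print_external_db_values() {
  local url
  url="$(build_db_url "$DB_HOST" "$DB_PORT" "$DB_NAME")" || return 1
  cat <<EOF
internalDatabase: false
dataloreEnv:
  # any other previously defined parameters
  DB_USER: "${DB_USER}"
  DB_URL: "${url}"
EOF
}

print_external_db_values
```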

Datalore requires at least two Kubernetes persistent volumes for its operation. These volumes will be used to store the attached files of the notebooks and all other outputs produced by the notebooks.

In datalore.values.yaml, add the following parameters:

    volumeClaimTemplates:
      - metadata:
          name: storage
        spec:
          accessModes:
            - ReadWriteOnce
          resources:
            requests:
              storage: 120Gi
      - metadata:
          name: postgresql-data
        spec:
          accessModes:
            - ReadWriteOnce
          resources:
            requests:
              storage: 10Gi

Run the following command and wait for Datalore to start up:

    helm install -f datalore.values.yaml datalore datalore/datalore --version 0.2.28

note

Important: You can run kubectl port-forward svc/datalore 8080 to test whether Datalore starts up. However, to make Datalore accessible, configure ingress and install the corresponding ingress controller before deploying Datalore. Below is a plain HTTP ingress setup example:

    ingress:
      enabled: true
      hosts:
        - host: datalore.mycompany.com
          paths:
            - path: /
              pathType: Prefix

Also, when using ingress, use this annotation to adjust the allowed file size in your configuration.
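As an illustration of the annotation mentioned above, the sketch below prints the ingress block with a request body size limit. It assumes that you use the ingress-nginx controller, where the nginx.ingress.kubernetes.io/proxy-body-size annotation sets the maximum allowed request body size, and that the Datalore chart accepts an annotations map under ingress, a common Helm chart convention not confirmed in this article. The print_ingress_values helper and the 1024m default are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: print an ingress block for datalore.values.yaml.
# Assumptions: the ingress-nginx controller is used, and the chart
# accepts an "annotations" map under "ingress".
print_ingress_values() {
  local host="$1" body_size="${2:-1024m}"   # 1024m is a hypothetical limit
  if [ -z "$host" ]; then
    echo "usage: print_ingress_values <host> [body-size]" >&2
    return 1
  fi
  cat <<EOF
ingress:
  enabled: true
  annotations:
    nginx.ingress.kubernetes.io/proxy-body-size: "${body_size}"
  hosts:
    - host: ${host}
      paths:
        - path: /
          pathType: Prefix
EOF
}

print_ingress_values datalore.mycompany.com
```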

Go to the URL defined in the first step and sign up the first user. The first user to sign up automatically receives admin rights.

tip

Unless the email service is configured, there is no registration confirmation. You can log in right after providing the credentials.

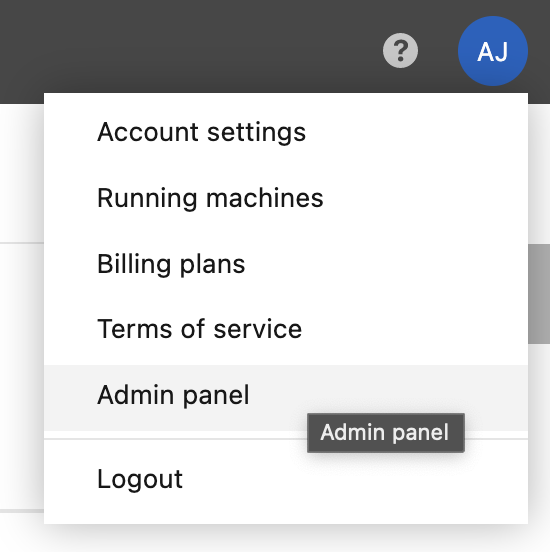

Once you are logged in, install the license: click your avatar in the upper right corner, select Admin panel | License, and provide your license key.

For more information about Datalore licensing, see this article.

To deploy the Datalore server into a non-default namespace, run the following command:

    helm install -n <non_default_namespace> -f datalore.values.yaml datalore datalore/datalore --version 0.2.28

To specify the non-default namespace for your agent configurations, define the namespace variable in datalore.values.yaml as shown in the code block below:

    agentsConfig:
      k8s:
        namespace: <non_default_namespace_name>
        instances: ...

Find more details about configuring agents in this topic.
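Namespace names must be valid RFC 1123 labels, so a name with an uppercase letter or a leading hyphen is rejected by Kubernetes. The bash sketch below checks the name first and then prints the install command. It adds Helm's --create-namespace flag, which creates the namespace if it does not exist yet; this flag, the function names, and the datalore-prod namespace are assumptions of the sketch, not part of the documented procedure.

```shell
#!/usr/bin/env bash
# Sketch: validate a namespace name and build the install command for it.

# Kubernetes namespace names must be RFC 1123 labels: at most 63 characters,
# lowercase alphanumerics or "-", starting and ending with an alphanumeric.
valid_namespace() {
  local ns="$1"
  local re='^[a-z0-9]([-a-z0-9]*[a-z0-9])?$'
  [ "${#ns}" -ge 1 ] && [ "${#ns}" -le 63 ] && [[ "$ns" =~ $re ]]
}

# Print the helm command; --create-namespace makes Helm create the
# namespace if it does not exist yet.
datalore_install_cmd() {
  local ns="$1"
  if ! valid_namespace "$ns"; then
    echo "error: invalid namespace name: $ns" >&2
    return 1
  fi
  echo "helm install -n $ns --create-namespace -f datalore.values.yaml datalore datalore/datalore --version 0.2.28"
}

datalore_install_cmd datalore-prod   # hypothetical namespace name
```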

Under dataloreEnv in datalore.values.yaml, define the following variables:

| Name | Type | Default value | Description |
|---|---|---|---|
| DATABASES_K8S_NAMESPACE | String | default | K8s namespace where all database connector pods will be spawned. |
| GIT_TASK_K8S_NAMESPACE | String | default | K8s namespace where all Git-related task pods will be spawned. |

Find the full list of server configuration options in this topic.
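For example, to keep database connector pods and Git task pods in the same dedicated namespace, you could generate the two entries with a small bash helper. This is a sketch: print_namespace_env and the datalore-workloads namespace are hypothetical, and you should make sure the namespace exists in the cluster before Datalore spawns pods there.

```shell
#!/usr/bin/env bash
# Sketch: print dataloreEnv entries that place database connector pods and
# Git task pods in one namespace instead of "default".
print_namespace_env() {
  local ns="${1:-default}"   # "default" matches the documented default value
  cat <<EOF
dataloreEnv:
  # any other previously defined parameters
  DATABASES_K8S_NAMESPACE: "${ns}"
  GIT_TASK_K8S_NAMESPACE: "${ns}"
EOF
}

print_namespace_env datalore-workloads   # hypothetical namespace name
```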

Enable a whitelist for new user registration. Only users whose emails are on the whitelist can register.

Open the datalore.values.yaml file.

Add the following parameter:

    dataloreEnv:
      ...
      EMAIL_ALLOWLIST_ENABLED: "true"

The corresponding tab then becomes available in the Admin panel.

By default, all Hub users can register unless you disable registration in the Admin panel. If you want to grant Datalore access only to members of specific Hub groups, perform the steps below:

Open the datalore.values.yaml file.

Add the following parameter:

    dataloreEnv:
      ...
      HUB_ALLOWLIST_GROUP: 'group_name', 'group_name1'

While Datalore can operate on AWS Fargate, be aware of the following restrictions:

Attached files and reactive mode will not work due to Fargate security policies.

Spawning agents in privileged mode, as set up by default, is not supported by Fargate.

Fargate does not support EBS volumes, our default volume option. As a workaround, we currently suggest that you set up AWS EFS, create PersistentVolume and PersistentVolumeClaim objects, and edit the datalore.values.yaml config file as shown in the example below:

    volumeClaimTemplates:
      - metadata:
          name: postgresql-data
        spec:
          accessModes:
            - ReadWriteMany
          storageClassName: efs-sc
          resources:
            requests:
              storage: 2Gi
      - metadata:
          name: storage
        spec:
          accessModes:
            - ReadWriteMany
          storageClassName: efs-sc
          resources:
            requests:
              storage: 10Gi
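For the EFS workaround, the PersistentVolume objects can be generated with a script. The bash sketch below prints static PersistentVolume manifests for the two claim templates above. It assumes the AWS EFS CSI driver (driver name efs.csi.aws.com) is installed in the cluster; the PV names, the file system ID, the access point IDs, and the print_efs_pv helper are hypothetical. Giving each volume its own EFS access point keeps the database files and notebook storage apart.

```shell
#!/usr/bin/env bash
# Sketch: print static PersistentVolume manifests backed by AWS EFS.
# Assumes the AWS EFS CSI driver is installed; volume handles are hypothetical.
print_efs_pv() {
  local name="$1" size="$2" handle="$3"
  if [ -z "$name" ] || [ -z "$size" ] || [ -z "$handle" ]; then
    echo "usage: print_efs_pv <name> <size> <volume-handle>" >&2
    return 1
  fi
  cat <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: ${name}
spec:
  capacity:
    storage: ${size}
  volumeMode: Filesystem
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  # Must match storageClassName in the volumeClaimTemplates.
  storageClassName: efs-sc
  csi:
    driver: efs.csi.aws.com
    volumeHandle: ${handle}
EOF
}

# One PV per claim template, each on its own EFS access point
# (volume handle format: <file-system-id>::<access-point-id>).
print_efs_pv datalore-postgresql-data 2Gi fs-0123456789abcdef0::fsap-0aaaaaaaaaaaaaaaa
echo "---"
print_efs_pv datalore-storage 10Gi fs-0123456789abcdef0::fsap-0bbbbbbbbbbbbbbbb
```

Save the output with > efs-pv.yaml and apply it with kubectl apply -f efs-pv.yaml.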

After the basic installation, follow the configuration procedures below. Some of them are required, as you need to customize Datalore On-Premises in accordance with your project.

| Procedure | Description |
|---|---|
| Required | |
| | Used to change the default agents configuration |
| | Used to enable GPU machines |
| | Used to customize plans for your Datalore users |
| Optional | |
| | Used to create multiple base environments out of custom Docker images |
| | Used to integrate an authentication service |
| | Used to enable a service generating and distributing gift codes |
| | Used to activate email notifications |
| | Used to set up auditing of your Datalore users |

We also recommend referring to this page for the full list of Datalore server configuration options.
